You go online and find the new ChatGPT AI bot. After chatting with it for a while, you have shared all kinds of personal information. Perhaps you mentioned your name, the names of some colleagues, family, and friends, your interests, where you work, and more. Even before you started talking, ChatGPT already had your phone number and email address from the sign-up process, when you agreed to its terms of service, which you didn’t read in full.

Now, a few questions inevitably arise. Did the AI bot store all of that information? Is your data safe? Will the AI share it with other users? And how does it use your data?

I set out to write a normal story that would answer these and other questions. Little did I know that I would embark on a long journey rife with contradictions, fueled by a lack of transparency and a poor general understanding of how the tech works.

ChatGPT privacy concerns are mounting

Companies like J.P. Morgan, Amazon, and Walmart have restricted and warned their employees against sharing sensitive business data with ChatGPT. According to Insider, an Amazon corporate lawyer told employees that the company has already seen instances of ChatGPT responses that are similar to internal Amazon data. So how did the AI bot get this information? And should individuals be worried about sharing their sensitive data with ChatGPT?

To get to the bottom of this, I reached out to data privacy experts from the United Kingdom and the United States, as well as top-level executives from AI organizations and cloud platforms. What follows is the search for elusive data privacy answers.

The privacy trade-off

Jamal Ahmed has been dubbed the “King of Data Protection” by the BBC. As a global privacy consultant at Kazient Privacy Experts, Ahmed works on data privacy professionally. I asked him whether he was concerned about data privacy risks related to ChatGPT and similar models developed by Microsoft, Google, You.com, and others.

“Concerned? You betcha!” Ahmed said. “While ChatGPT and its ilk promise the world, it’s crucial to remember that there’s no such thing as a free lunch. And, in this case, the lunch could be your data privacy.”

According to Ahmed, AI chatbots have opened a digital Pandora’s box. Both OpenAI’s privacy policy and Microsoft’s privacy policy, as well as other documentation, have been criticized for their lack of transparency and vague language. And while the companies assure users they do not save what gets typed into the chatbot, experts disagree.

“When you share information with ChatGPT or similar AI models, you’re essentially contributing to the system’s learning process,” Ahmed told me. “This data is stored in secure data centers managed by the respective companies.” Ahmed went on to warn me about the need for vigilance. “While these organizations claim to strive to anonymize and protect user information, the access to this data can still vary. It’s important to remember that, in some cases, user data can be linked to individual identities.”

Who might get access to your personal data?

In Chicago, Illinois, Debbie Reynolds, a global data privacy and protection expert strategist, has become a leading voice in the world of data privacy and emerging technologies. Debbie frequently appears in US mainstream media like PBS, Wired, Business Insider, and USA Today. I came across her work during my research and reached out for some insight.

By the time I spoke to Reynolds, I had many more questions than when I had started my investigation. Could personal data be at risk if ChatGPT were breached? Could law enforcement issue warrants to obtain personal information? Does ChatGPT save chat history despite claiming that it doesn’t?

“The answer would be, Yes. Yes to all of that,” Reynolds told me over the phone. “Some companies are trying to find ways to minimize their risk. If they had a breach, they might have policies that make sure that the data that goes into the model (ChatGPT) is less personally identifiable.”

“Anything that a tech company collects about a person, as long as they store it in terms of what their business model is, can be requested by law enforcement,” Reynolds added.

She also highlighted the importance of the agreement that users must digitally accept to access ChatGPT. “In basic terms, most of these companies, when you sign up to use their products and put in information, the information basically belongs to them. So you don’t have a lot of control over what they do with the data after they receive it.”

Is ChatGPT being trained on the information you share with it?

One of the guardrails coded into ChatGPT exists specifically to prevent the AI model from using the information that a user types to create responses for other users. But ChatGPT admits that the chatbot does save chat history throughout individual conversations.

“They’re trying to make it so that they are not actually regurgitating that information back out,” Reynolds explained. “So when a user puts in something in the prompt, they try to filter that information out so it won’t be used.”

When I asked Sagar Shah from Fractal.ai for his opinion, he jumped straight into cybersecurity and privacy risks. “As it pertains to the privacy policy, OpenAI’s training protocol could be problematic,” said Shah. “ChatGPT has been fed books, articles, websites, and, most glaringly, the info every user gives the bot via the questions they ask.”

“People may very well be handing over sensitive info, proprietary content (e.g., code, essays, prose), as well as user IP addresses, browser type, settings, and data on users’ interactions with the AI,” he added.

Are there any laws that protect the data you share with ChatGPT?

Laws and regulations always tend to be one step behind technology and innovation. And while a wide range of laws apply to data privacy, the AI sector is still in a grey area. One question I had was what happens if law enforcement officials ask for a user’s data.

“This is a new tool that creates a lot of new legal issues that people haven’t looked at yet,” Debbie Reynolds explained. “And so, I have not yet heard of anyone in law enforcement requesting data from chatbots.”

I emailed OneTrust to get their take on the issue and received the following reply from Blake Brannon, their Chief Product & Strategy Officer. “While copyright ownership and trade secret protection are important concerns for businesses using AI technology, there are more fundamental issues to consider, especially with upcoming regulations like the AI Act.”

The AI Act is the first law on AI, proposed by regulators in Europe, a region leading the world in technology-related laws. As of March 2023, the first draft of the law is being introduced and debated in the European Parliament.

Under the law, ChatGPT and similar AI technologies will have to undergo external audits. These audits will check whether they meet performance and safety standards. “This regulation (AI Act) will govern what data can be used to train AI tools and what level of explicit consent must be given by individuals if personal data is being used to train the AI,” Brannon explained.

To comply with the law, organizations would have to manage and govern what their teams are using AI technologies for. It may also require organizations to ask for explicit consent from their users. Transparency, including the disclosure of what types of AI they are using and how they are ensuring the AI is compliant, will also be essential.

“It’s important for businesses to be aware of these issues and take steps to ensure they are in compliance with regulations and ethical considerations related to AI,” Brannon said.

How will companies use ChatGPT and mitigate privacy risks?

Recently, Microsoft announced it was expanding its ChatGPT portfolio with a wide range of enterprise solutions for companies to integrate ChatGPT into their systems. Other companies like Salesforce also unveiled plans to integrate a chatbot into Slack. And several leading technology companies, including Snap and Discord, have been racing to integrate generative AI into their consumer-facing products and tools.

Given this trend, I wondered how companies would be able to leverage the potential of ChatGPT while safeguarding their sensitive data and mitigating risks.

“I would tell a client, customer, or business peer that they should carefully evaluate the potential benefits of using AI in their operations,” Sagar Shah said. “Companies should consider improved efficiency, increased customer engagement, and cost savings, but also be transparent in making sure they know the risks — such as data privacy and security vulnerabilities.”

I met Madhav Srinath, CEO/CTO of NexusLeap, at a virtual AI conference a few days before I began writing this report. I was curious to know what he had to say about companies that want to integrate AI chatbot technology into their operations.

“By utilizing the cloud, organizations can seamlessly integrate AI models like ChatGPT into their products in a modular fashion,” Srinath said. “This approach enables them to stay at the forefront of the rapidly progressing tech ecosystem while maintaining data privacy, security, and scalability.”

“Companies need to ensure that any data going in and out of a tool like ChatGPT is locked down and protected,” said Srinath. “Companies have more control over chatbots they build themselves, but it doesn’t necessarily mean it will be inherently safer. They have to intentionally set those guardrails and boundaries themselves. It’s very possible for a company to build a chatbot that’s far less secure than ChatGPT, even though the latter is publicly accessible.”

“When people started using Google Translate,” Debbie Reynolds told me, “especially when they were trying to use it to translate legal documents or contracts that are confidential in nature, organizations developed their own in-house translation apps in closed systems. So when they translate, their information isn’t going out into the internet.”

It seems that with ChatGPT, companies will need to determine not only the benefits of using the technology but also the risks and then decide how they want to use it.

“In the future, companies may be able to create their own internal AI chatbots which are not connected to the internet and only use the information within the organization,” Reynolds told me. “So I think that’s a good thing, and it will be very popular among companies.”

Jamal Ahmed also weighed in on how companies should use ChatGPT. “For clients considering the adoption of ChatGPT or similar models in their operations, I recommend embracing their inner data privacy superhero and taking a comprehensive and thoughtful approach. This includes conducting a thorough risk assessment, developing robust data protection policies, and maintaining transparency with users — championing data privacy with a mix of professionalism and vigilance.”

I interviewed ChatGPT on data privacy. Here’s what it said.

I dived into everything I could find online regarding OpenAI’s AI standards, its privacy policy, and any related information on how the AI chat works. While I found no specifics for most questions, Microsoft, unlike OpenAI, assures users that the ChatGPT-powered Bing AI is not using the information that users type in to train it further.

However, everyone I talked to, including data privacy experts from the US and the UK, strongly disagreed. This left me perplexed and wanting to get to the bottom of the issue. Then something very interesting happened.

While searching for more information, I decided I should go directly to the source. Maybe the ChatGPT AI bot itself would have something to say on the subject. After all, if anyone had the answers about how ChatGPT worked, it would be the AI app itself. So I decided to interview it.

The ChatGPT version I talked with is provided by OpenAI. Here’s what happened.

Does ChatGPT have a history of my chats?

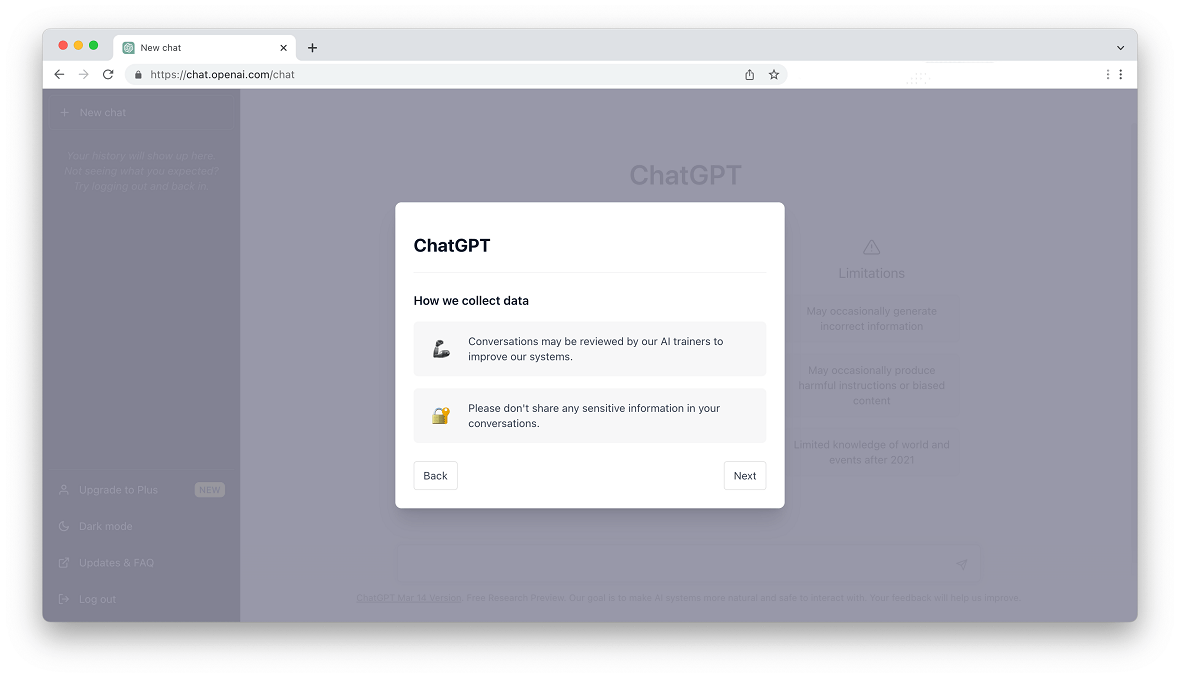

Before I even began chatting with the app, a warning appeared on the screen, stating, “Conversations may be reviewed by our AI trainers to improve our system. Please don’t share any sensitive information in your conversation.” I wondered how many users paid attention to that message or understood the privacy risks involved.

I moved on and decided to get straight to the point. I started out with an easy question: “Where do you store the information that I type?”

“As an AI language model, I do not have the capability to store information on my own,” answered ChatGPT. “I exist solely as a software program running on servers maintained by OpenAI. When you type information to me, it is transmitted over the internet and processed by the servers that host my program. OpenAI takes user privacy and data security very seriously, and they store your information in a secure manner in compliance with industry-standard security protocols.”

OK, I thought, maybe I should take things up a notch. “Do you have a history of my chats with you?” I asked.

“As an AI language model, I do not retain any memory of our previous conversations unless you explicitly choose to save a copy of the chat,” ChatGPT replied. “When you use me, your chat history is stored on the device or platform where you are using me, such as your computer or smartphone, or the messaging platform you are using to communicate with me. OpenAI does not retain any user data or chat history beyond what is necessary to provide the service, and any data that is collected is treated with the utmost respect for user privacy and security.”

It seemed clear that the AI app was giving me somewhat generic privacy policy answers, which contradicted the initial privacy warning.

Is my information safe with ChatGPT?

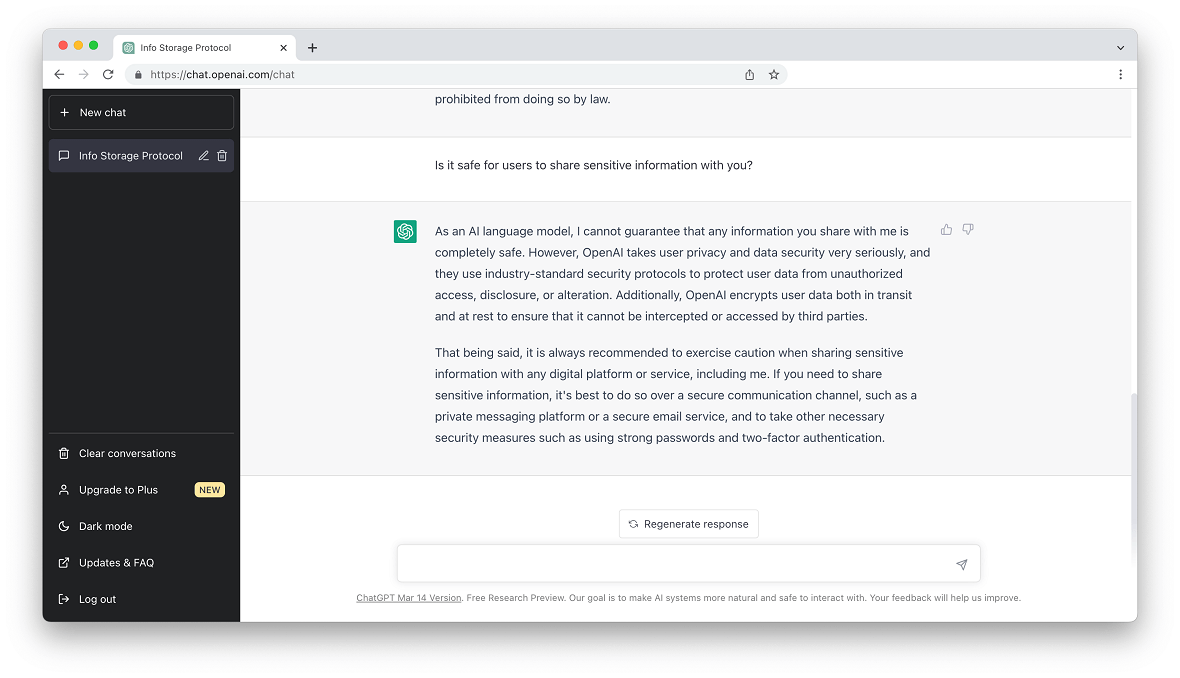

When asked directly if the information I shared with it was safe, ChatGPT was less reassuring. “As an AI language model, I cannot guarantee that any information you share with me is completely safe.”

And then it said, “However, OpenAI takes user privacy and data security very seriously, and they use industry-standard security protocols to protect user data from unauthorized access, disclosure, or alteration. Additionally, OpenAI encrypts user data both in transit and at rest to ensure that it cannot be intercepted or accessed by third parties. That being said, it is always recommended to exercise caution when sharing sensitive information with any digital platform or service, including me.”

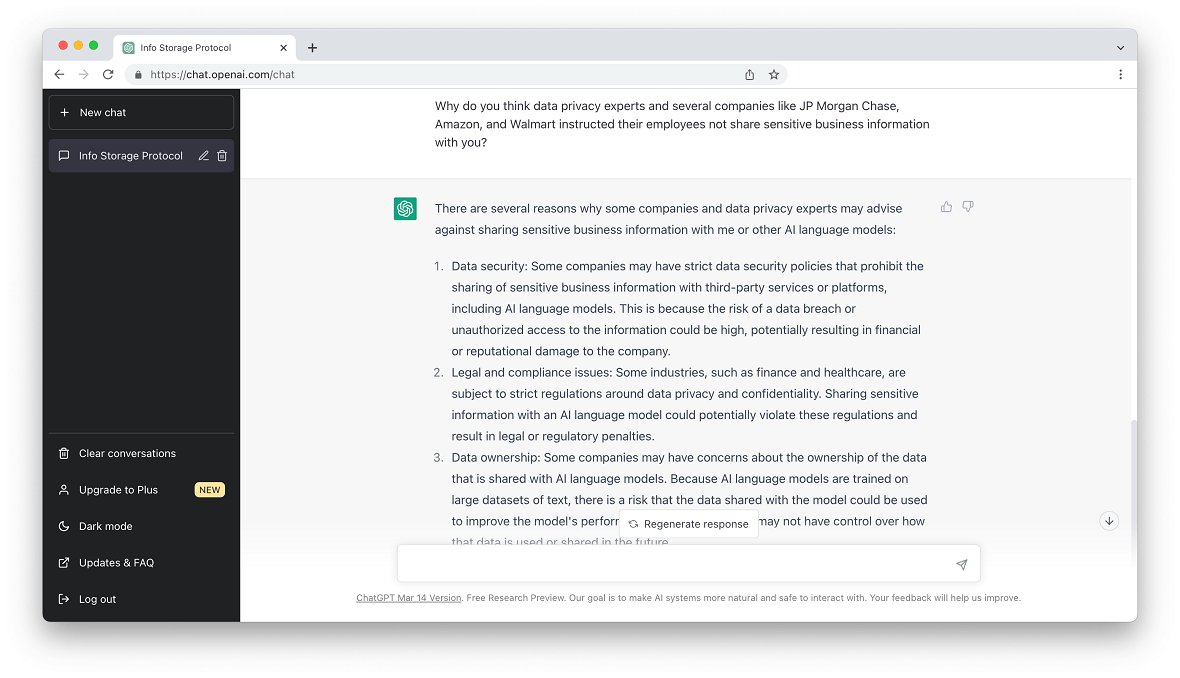

“Why do you think data privacy experts and several companies like J.P. Morgan Chase, Amazon, and Walmart instructed their employees not to share sensitive business information with you?” I asked ChatGPT, pushing harder.

“There are several reasons why some companies and data privacy experts may advise against sharing sensitive business information with me or other AI language models,” it replied.

Then it listed data security, legal and compliance, and data ownership standards that companies apply to mitigate the risks of sharing sensitive data with third-party providers. Once again, its answers were generic, and the bot did its best not to specifically address the question I had asked.

Do real people see my chats with ChatGPT?

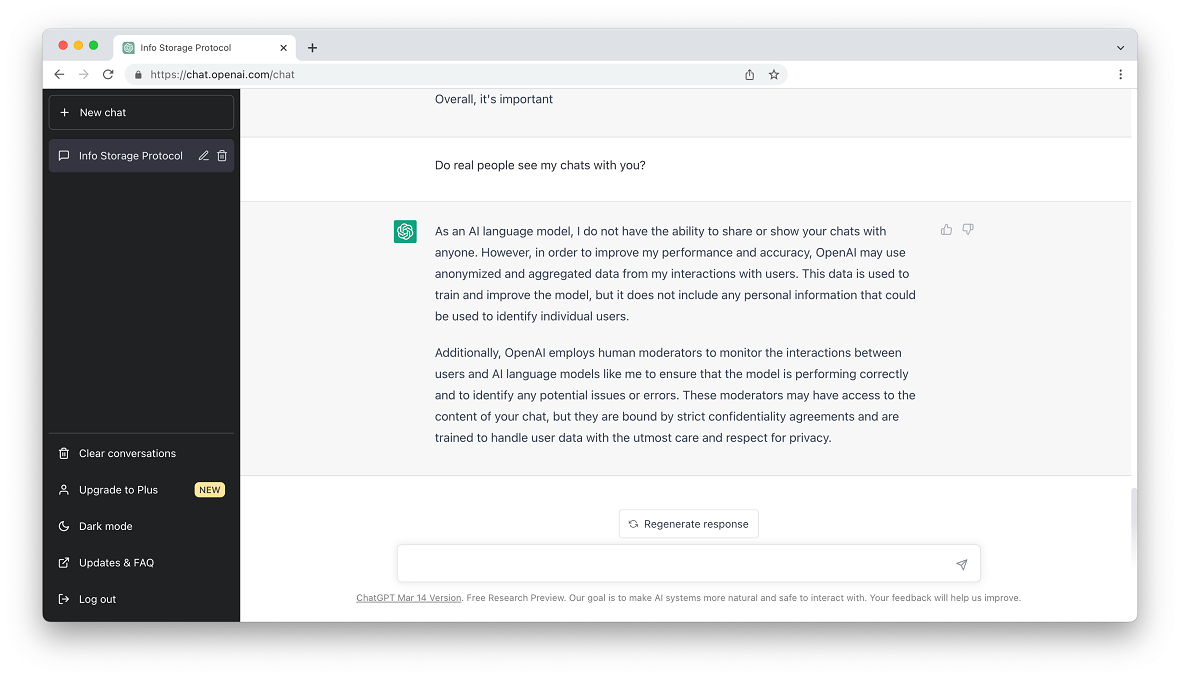

Later, ChatGPT explained to me how the company uses anonymized and aggregated data from chat interactions with users to train and improve its model. It also assured me that the data used for this purpose does not include any personal information “that could be used to identify individual users.”

However, The Telegraph recently reported that, in the case of Microsoft’s Bing chatbot, staff members are reading users’ conversations with it. A Microsoft spokesman told the media, “To effectively respond to and monitor inappropriate behavior, we employ both automated and manual reviews of prompts shared with Bing.”

ChatGPT also admitted that human moderators access its chat data, stating, “OpenAI employs human moderators to monitor the interactions between users and AI language models like me to ensure that the model is performing correctly and to identify any potential issues or errors. These moderators may have access to the content of your chat, but they are bound by strict confidentiality agreements and are trained to handle user data with the utmost care and respect for privacy.”

What is OpenAI doing to protect my data?

OpenAI denies that it reviews conversations or uses the info typed into ChatGPT to train the AI. It also has processes to delete chat history and data in bulk, and it urges users not to share personal information. But for some reason, the chatbot itself often has a hard time answering simple privacy questions and gives generic, evasive answers.

I wanted to know if the developers were working to mitigate the privacy risks of ChatGPT, so I asked, “Is OpenAI currently working to strengthen your privacy features? And how much does privacy policy restrict your daily operations regarding what you can research, say and learn?”

“Yes, OpenAI is constantly working to strengthen the privacy features of their language models, including me,” the AI chat replied. “OpenAI regularly reviews and updates its security protocols to ensure that they remain effective against emerging threats and to improve the protection of user data.”

ChatGPT explained its ethical standards, privacy principles, and how it is coded to prevent malicious use of the app, bias, and harmful content.

“Overall, OpenAI is committed to ensuring that their language models, including me, are used in a responsible and ethical manner and that user privacy and security are protected to the greatest extent possible,” it said.

Still, I wonder. Is privacy a priority for OpenAI? And what about all the other AI chatbots that are being released out into the world?

One thing has become clear to me. The AI conversation on data privacy and security is far from over. In fact, it is just beginning. Hopefully, we will have this necessary debate before a serious data privacy breach with significant consequences takes the world by surprise. How will it all play out? No one, AI or otherwise, has the answer.