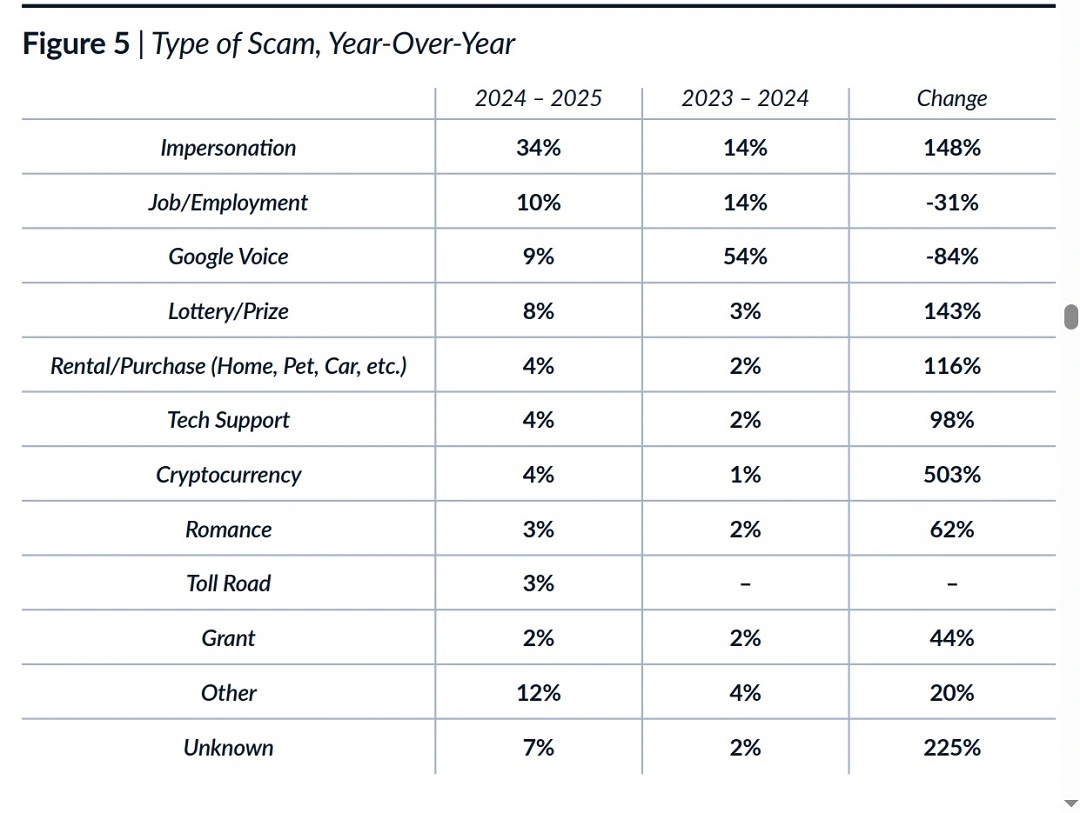

A new report has found that AI impersonation scams are up by 148%. While the total number of victim-reported cybercrimes has dropped compared to last year, those affected are seeing more significant losses.

The report suggests that the lower reported numbers of crimes and higher losses have been driven by AI, a category of tech that allows bad actors to target victims more effectively, no longer needing to cast wide nets.

We analyzed the report and spoke to experts to answer what has changed with AI impersonation and what users need to know today.

Impersonation scam trends in 2025 and the shift in strategies and technologies

The ITRC 2025 Trends in Identity report found that cybercriminal gangs are reinventing and modernizing their scams using AI.

Impersonating known organizations and brands has always been a go-to technique used by cybercriminals, as victims let their guard down and are more likely to fall for scams when they believe they are engaging with companies or organizations they know.

In 2025, impersonation continues to play a leading role among scammers. However, things have changed. Scammers are now using AI extensively to develop, enhance, and scale these types of cyberattacks.

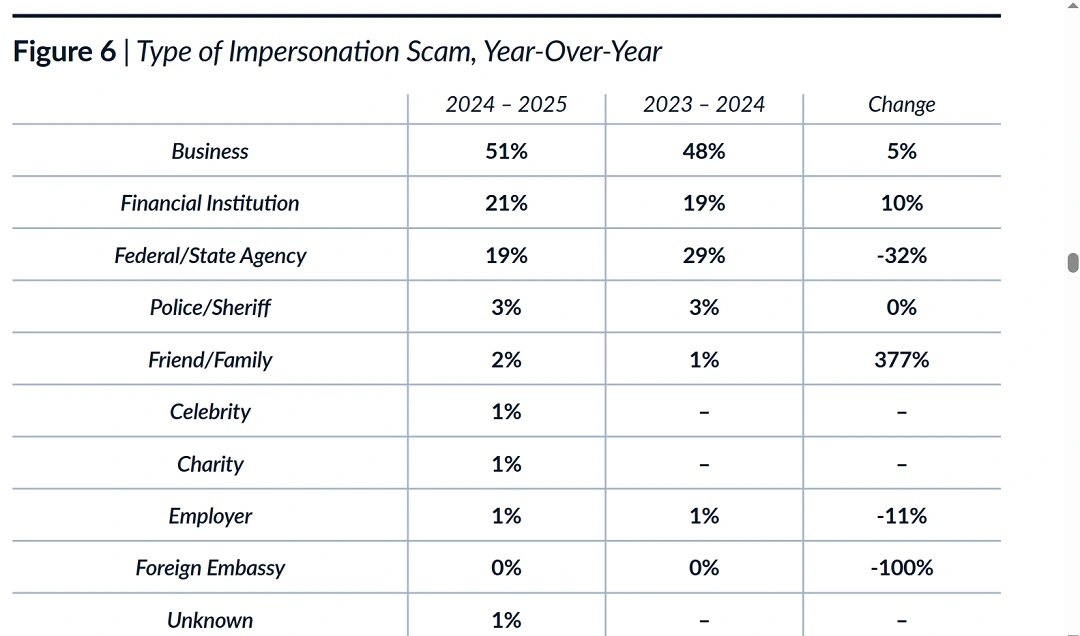

Reported crime data for the year reveals that more than half (51%) of all scammers are impersonating businesses, 21% impersonate financial institutions, and both scam categories are on the rise.

Government impersonation, whether of a federal or state agency, accounted for 32% of all reported crimes. Believe it or not, this represents a decrease compared to the same timeframe in the previous year.

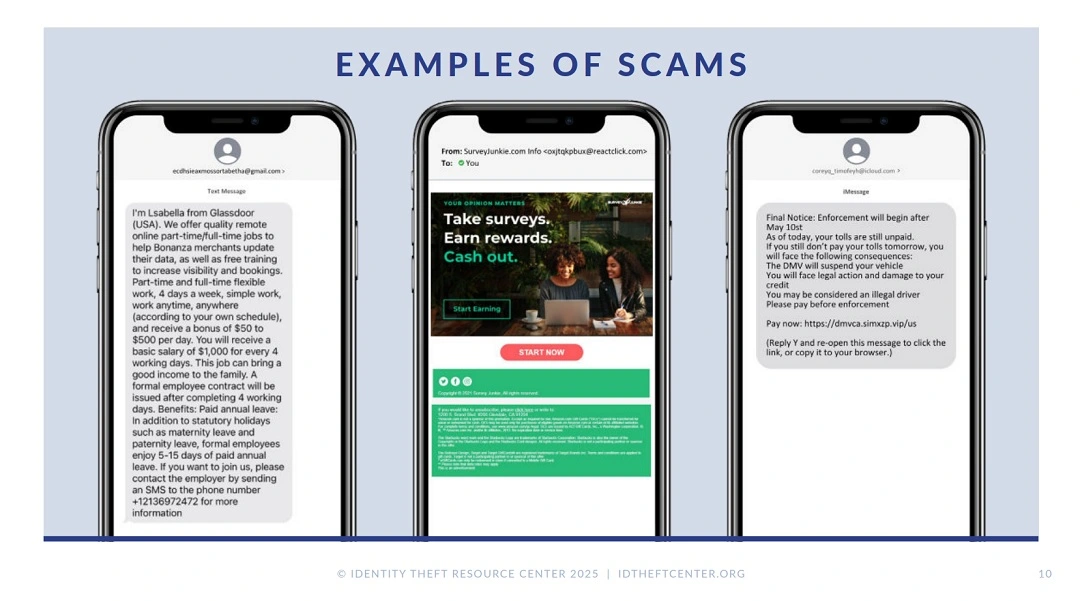

Email spoofing, fake websites, malvertising, and over-the-phone phishing are now the channels scammers use most.

Moonlock has observed similar scam trends in countries beyond the United States, including the United Kingdom and countries across Europe and Asia.

Toll road scams have made a huge comeback, driven by the industrialized nature of cybercriminal groups like Smishing Triad, which operates from Asia. The group combines state-of-the-art technology and new technical advancements like AI with compromised accounts and stolen data to make attacks more effective.

These groups’ technological advancements show up in hard statistics: 1 in every 33 scams is now a toll road scam.

What has changed with AI impersonation scams? Experts answer

We asked cybersecurity experts what has changed from a user’s perspective with AI-driven impersonations and what users need to know right now.

“AI tools today can mimic voices, faces, and even writing styles within minutes, making fake emails, texts, and calls feel almost real,” Fergal Glynn, Chief Marketing Officer and AI Security Advocate of Mindgard, told us.

Scammers usually pretend to be well-known tech firms, banks, or delivery companies, and spread their fraud through emails, SMS, social media chats, and deepfake audio or video calls, said Glynn.

According to Glynn, the smartest defense for users is to slow down, double-check the sender using an independent source, and keep multifactor authentication enabled across all their accounts. “Stay alert, it’s your best armor,” he said.

Before you click, take 9 seconds to think

Jeff Le, Managing Principal at 100 Mile Strategies, a public sector consultancy, and a Fellow at George Mason University’s National Security Institute, told Moonlock that a major area of focus has been impersonations of tech companies that handle payments, including Amazon, PayPal, Apple, and credit card companies, and even Google/Meta password requests for privileged access.

Le added that pausing for a couple of seconds is vital for users. Initiatives like Take9, a public service campaign that encourages individuals to pause for 9 seconds before clicking on links or downloading files, are working toward broader digital literacy. The goal is to get users to focus on pausing before pushing, Le said.

Kris Jackson, SVP, Director of Security Engineering and Operations at BOK Financial, told us that users should be aware that scammers are sending out messages (SMS, emails, or others) precisely tailored for them.

“The era of clumsy, poorly written ‘Nigerian prince’ scams is over,” said Jackson.

FANG impersonations (Facebook, Apple, Netflix, and Google), along with Microsoft impersonations, account for nearly half of all attacks, Jackson added.

“If an email creates a sense of urgency, especially one pushing you to click a link, take a moment to verify that everything is legitimate before taking action,” he said.

How far can AI hyper-personalization go?

AI allows cybercriminals to leverage hyper-personalization and take a scam further than victims have ever seen before.

“Scammers may pretend to be your friend, your grandkid, or a recruiter offering you a job,” Tim Marley, founder of Prism One, a cybersecurity solutions provider, told Moonlock.

“This is showing up a lot in the job market with fake interviews, phony onboarding processes, and people getting scammed out of money before they realize it wasn’t real,” Marley said.

AI tools are making it easier for attackers to sound convincing, and this can be especially hard on vulnerable groups like seniors or those actively looking for work, Marley added.

Cybercriminal scammers shift focus away from purely digital attacks to real-world crimes

The ITRC report shows that scammers have also shifted when it comes to what sensitive personal information they are stealing. This shift is a clear indication of what types of crimes will follow.

In the report, data fields like Name, Phone number, Address, and Social Security number, which have always been criminals’ favorites, have maintained their rates. But, strangely enough, scammers’ interest in login information (usernames and passwords) has decreased by 15%.

Interestingly, there was a 612% increase in crime reports related to stolen birth certificates, a 359% increase in stolen medical insurance card data, and a 139% increase in payment card number data. Stolen passports are also on the rise, up by 67%, and checkbooks and checks saw a 654% increase. Personal data found on the dark web has also surged by 865% compared to the previous time period.

Cybercriminals who steal victims’ data or digital copies of documents often turn to the dark web to sell what they steal and make a quick buck before engaging in crimes using the stolen data.

Compromised passports, ID documents like birth certificates, and financial data are hot commodities and sell fast on the dark web, often in bulk. This type of data can be used for digital crimes but also for real-world criminal activities.

Data sold on the dark web, driven by the demand of countless cybercriminal groups and threat actors, feeds a never-ending cycle in which breached victims are targeted over and over again.

Are cybercriminal scams and fraud really down?

As mentioned, the ITRC 2025 Trends in Identity report found that the number of reported crimes is down in the U.S. compared to other years. The report attributes the downtrend to factors like more effective AI-driven victim targeting and victim fatigue.

Victim fatigue refers to the emotional and psychological exhaustion that victims experience, which reduces their willingness to report crimes. In this case, victim fatigue is caused by the normalization of digital crime, the continual and relentless nature of cyberattacks and scams, and frustration with, or lack of information about, crime reporting systems.

Why are victims not reporting impersonation crimes?

We asked experts if they agreed with the ITRC’s conclusions on reported crimes.

Hyper-personalization means fewer victims spot or report these scams, Glynn from Mindgard said. Fewer reported crimes lead to a false sense of calm, while the threat quietly grows more dangerous and increasingly sophisticated, he said.

“Victims often feel ashamed, unsure if what happened is even a crime, or skeptical whether the officials can help,” he added.

“Cybercrime is clearly up,” Le from 100 Mile Strategies added, citing the $16 billion in cybercrime losses documented in a recent FBI report.

“Reporting structures are overwhelmed, users may not want to take the time to reach out to law enforcement, and also, communities may experience shame or even limited English language proficiency,” said Le.

On the other hand, Jackson from BOK Financial agreed with the ITRC report and the idea that fewer cybercrimes are being reported due to victim fatigue and the increasing precision of attacks. However, Jackson added that victims may also be growing desensitized.

“These incidents are becoming so common that they feel less urgent or shocking,” said Jackson.

“There’s also a growing sense of futility around reporting. Many believe that even if they do report, the chances of the crime being investigated or the perpetrator being held accountable are slim.”

Final thoughts

Several things have changed in the past few years in the online scam scene. Scammers are now highly organized criminal enterprises, often acting from foreign locations using the most advanced technology on the market.

These criminal groups also offer automated scamming services to other cybercriminal gangs or bad actors under malware-as-a-service (MaaS) models, effectively lowering the technical bar for those willing to cross the line into a life of cybercrime.

Additionally, the ITRC report shows that AI impersonation drives hyper-personalization, increasing precision targeting and attack success rates. AI hyper-personalization also seems to be a factor in why victims are not reporting crimes. The more personal a cyberattack feels, the greater the emotional stress will be, leading to shame or fear of possible escalation.

Simple techniques like stopping for 9 seconds before clicking, responding to a phone call, or answering an SMS are being promoted by organizations because they are so effective. Knowing how scammers continually change their MO allows any and all users to stay one step ahead. Additionally, keeping up to date with tips provided by security industry experts on how to stay safe can help users regain control of their digital environment.