On February 7, a bad actor posted on Breach Forums advertising leaked data from 20 million OpenAI users for sale. The actor, going by “EmirKing,” provided a sample of the data, which included credentials. Less than a week later, we can now answer the question: Were the breach and the data leak real?

When technology media picked up EmirKing’s post, several things happened. For one, OpenAI told the media that it took these types of incidents “very seriously.” The company then initiated an investigation.

Simultaneously, cybersecurity researchers attempted to verify the hacker’s claim. They found anomalies that led them to believe the claim was probably not true.

After a deep-dive analysis of the data and the claims of the threat actor by threat management company KELA, it appears that the alleged hack is not entirely true. However, the leaked credentials and email addresses are authentic. How can this be? In this report, we answer that and other questions.

The 20-million-user ChatGPT data leak is real, but it did not come from OpenAI

It’s been a few days since EmirKing announced that the data of 20 million OpenAI users was for sale. The listing included passwords and was priced at just a few dollars.

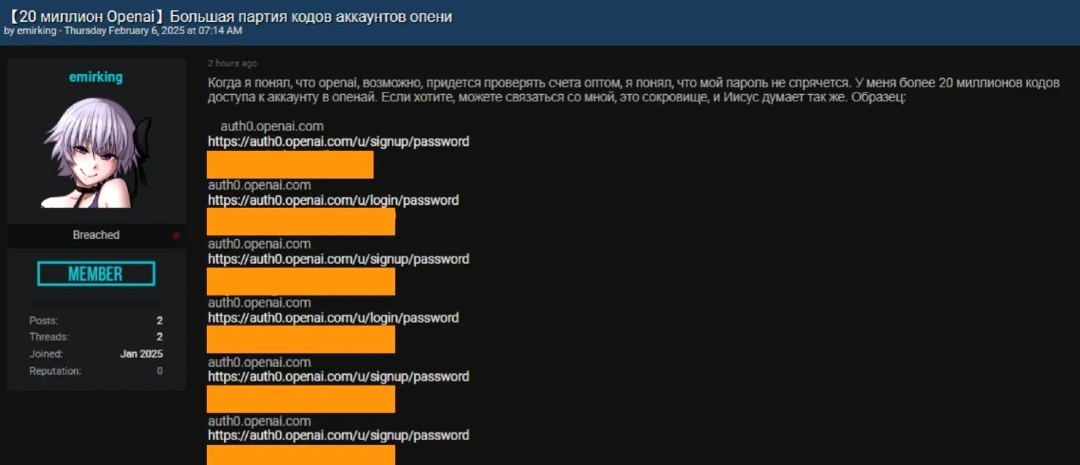

EmirKing’s post, written in Russian, is translated as follows:

“When I realized that OpenAI might have to verify accounts in bulk, I understood that passwords wouldn’t stay hidden. I have more than 20 million access codes to OpenAI accounts. If you want, you can contact me — this is a treasure.”

Strangely enough, unlike others on Breach Forums, EmirKing had opened their account very recently. The account was used almost exclusively to promote this leak. EmirKing had only one other post under their username, and their interaction with other cybercriminals on the platform was minimal to nonexistent.

These are all clear signs that instantly raise suspicion about the veracity of the claim. Usually, the more respected hackers on Breach Forums have been on the platform for years and actively post about a variety of topics while interacting with other individuals and gangs.

KELA cross-checked the leaked OpenAI user data and found this

On February 10, KELA reported that it had cross-referenced the data sample in EmirKing’s post. KELA found that the leaked data was not the result of a breach at OpenAI but a combination of stealer malware logs and existing leak databases.

What does this mean? It means that the threat actor never hacked or breached OpenAI systems. Rather, they used previously leaked databases found underground, which cybercriminals sell, buy, trade, or provide free access to. This includes databases created by credential-selling cybercriminals using stealer malware.

This finding is good news for OpenAI. It means, in a way, that there is no active vulnerability on the company’s side of things. Nevertheless, it’s still bad news for OpenAI users, whose data is now part of a real leak.

This type of data leak, as mentioned, is sold on the dark web over and over again. Cybercriminals who buy these databases use them to gain access to victims’ devices, launch attacks, deploy and distribute malware, run fake ads, take over accounts, and steal identities to commit financial fraud.

KELA also found that while EmirKing’s post on Breach Forums was written in Russian, the wording of the text suggests the actor used a translation tool. Therefore, it is likely that the culprit behind this criminal enterprise is not a native Russian speaker.

KELA confirmed the suspicions of cybersecurity experts, saying that the low activity and low engagement of EmirKing’s Breach Forums posts point to a low-skilled threat actor, as does the lack of connections with other cybercriminals.

Notably, EmirKing has deleted the Breach Forums post despite still being a member of the platform. The FBI shut down and seized Breach Forums, but the site moved to a different domain and continued operating.

More on the data leak sample analysis

The sample data that KELA analyzed included 30 compromised credentials linked to OpenAI services. All contained authentication details for auth0.openai.com.

“These credentials were cross-referenced with KELA’s data lake of compromised accounts obtained from infostealer malware, which contains more than a billion records, including over 4 million bots collected in 2024,” KELA researchers said.

It’s not just a few instances, either. All of the OpenAI credentials shared by EmirKing came from accounts compromised by stealer malware. Unfortunately, KELA believes that all 20 million OpenAI credentials are real and that the actor intends to sell them.
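The source does not describe KELA’s tooling or record format, so the following is only a minimal sketch of what cross-referencing a forum sample against a set of known stealer logs could look like. The `Credential` type, the `normalize` and `cross_reference` helpers, and all records are hypothetical placeholders invented for this illustration.

```python
from typing import Iterable, NamedTuple


class Credential(NamedTuple):
    url: str        # login host, e.g. "auth0.openai.com"
    username: str
    password: str


def normalize(cred: Credential) -> Credential:
    """Trim and lowercase the host and username so trivial variants match."""
    return Credential(
        cred.url.strip().lower().rstrip("/"),
        cred.username.strip().lower(),
        cred.password,
    )


def cross_reference(sample: Iterable[Credential], known: Iterable[Credential]):
    """Split the sample into records found / not found in the known set."""
    known_set = {normalize(c) for c in known}
    matched, unmatched = [], []
    for cred in sample:
        (matched if normalize(cred) in known_set else unmatched).append(cred)
    return matched, unmatched


# Placeholder records for illustration only; none of these are real.
known_logs = [
    Credential("auth0.openai.com", "alice@example.com", "hunter2"),
    Credential("auth0.openai.com", "bob@example.com", "correct-horse"),
]
forum_sample = [
    Credential("auth0.openai.com/", "Alice@Example.com ", "hunter2"),
    Credential("auth0.openai.com", "carol@example.com", "s3cret"),
]

matched, unmatched = cross_reference(forum_sample, known_logs)
print(f"{len(matched)} of {len(forum_sample)} sample records already known")
```

If every record in a sample matches existing stealer logs, as KELA reported for EmirKing’s sample, the data is more plausibly recycled than freshly stolen from the vendor.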

Deeper analysis showed that the compromised data samples come from 14 distinct sources. KELA classifies these into two categories. Private Data Leaks originate from paid, subscription-based Telegram channels that sell bots. Public Data Leaks are widely shared stolen credentials that appear on public forums and dark web marketplaces, and mostly on Telegram channels.

“Notably, the most prevalent source in the dataset was linked to over 118 million compromised credentials in KELA’s data lake,” KELA said.

KELA revealed that the primary malware families used to steal data from OpenAI users were Redline (8 occurrences), RisePro (5 occurrences), StealC (4 occurrences), Lumma (5 occurrences), and Vidar (4 occurrences). These stealers stole the credentials between October 12, 2023, and July 28, 2024.
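As a quick sanity check, the per-family counts can be tallied against the 30-credential sample. The sketch below uses only the figures quoted in this article and assumes that each occurrence corresponds to one credential in the sample; the variable names are illustrative.

```python
# Occurrence counts per stealer family, as quoted from KELA's analysis.
stealer_counts = {
    "Redline": 8,
    "RisePro": 5,
    "StealC": 4,
    "Lumma": 5,
    "Vidar": 4,
}

SAMPLE_SIZE = 30  # credentials in the sample KELA analyzed

total = sum(stealer_counts.values())

# Share of the 30-credential sample attributed to each named family
# (assumes one occurrence equals one credential).
shares = {name: count / SAMPLE_SIZE for name, count in stealer_counts.items()}

for name, count in sorted(stealer_counts.items(), key=lambda kv: -kv[1]):
    print(f"{name:8s} {count:2d}  {shares[name]:.0%}")
print(f"Named families account for {total} of {SAMPLE_SIZE} credentials")
```

Under that assumption, the five named families cover 26 of the 30 sample credentials, with Redline the most frequent. The figures quoted here do not say how the remaining four were attributed.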

This is more bad news for OpenAI. The fact that the data and passwords of an OpenAI account can be retrieved illegally from user devices via stealers represents a risk and a vulnerability. OpenAI now faces the challenge of patching this weakness.

Stealers, also known as infostealers, often extract sensitive data like usernames and passwords from browsers. This type of malware can also pull passwords and other information from locations besides browsers.

Once an infostealer breaches a device, it can infiltrate systems and steal sensitive data, such as login credentials, financial details, personal details, and system and network information. All this is done without the victim knowing it.

Infostealers extract information from web browsers, password managers, and even clipboard data. The type and scale of the data can vary from one infostealer component to another.

I am an OpenAI user. What should I do?

If EmirKing had leaked all 20 million records from OpenAI users, you would have a good chance of knowing whether you were affected. But at this time, there is really no way of checking if your credentials were leaked. The exception would be if you were to contact an organization like KELA.

This data leak was assembled from older leak lists. Accordingly, we recommend that you check Have I Been Pwned, just to be safe.

Have I Been Pwned allows users to rapidly check if they were part of a data leak. Simply enter an email address and click Search. This organization works to maintain a comprehensive database of known breaches, removing duplicates and verifying claims.
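The email search on the website is a manual step. For passwords, Have I Been Pwned also runs a separate Pwned Passwords service with a range API at `api.pwnedpasswords.com`, which accepts only the first five characters of a password’s SHA-1 hash and returns the matching hash suffixes with their counts. The sketch below is a minimal illustration of that lookup, not an official client; the function names and the User-Agent string are assumptions made for this example.

```python
import hashlib
import urllib.request

RANGE_URL = "https://api.pwnedpasswords.com/range/"


def split_hash(password: str) -> tuple[str, str]:
    """Return (first 5 hex chars, remaining 35) of the uppercase SHA-1 digest."""
    digest = hashlib.sha1(password.encode("utf-8")).hexdigest().upper()
    return digest[:5], digest[5:]


def count_in_response(suffix: str, body: str) -> int:
    """Look up a hash suffix in a range response ("SUFFIX:COUNT" per line)."""
    for line in body.splitlines():
        candidate, _, count = line.strip().partition(":")
        if candidate.upper() == suffix:
            return int(count)
    return 0  # suffix not listed, so the password was not found


def pwned_count(password: str) -> int:
    """Query the range API; only the 5-character prefix is sent over the network."""
    prefix, suffix = split_hash(password)
    request = urllib.request.Request(
        RANGE_URL + prefix,
        headers={"User-Agent": "pwned-check-sketch"},  # hypothetical client name
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return count_in_response(suffix, response.read().decode("utf-8"))
```

Calling `pwned_count` with a candidate password returns how many times it appears in the known breach corpus, and zero if it is not listed. A nonzero result means that password should be retired everywhere it is used, not only on OpenAI.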

We also suggest that you change your OpenAI password, enable multi-factor authentication, use passkeys or biometrics, and delete automatic sign-in information for OpenAI from your browser.

Yes, you will have to type your email and password each time. However, it’s better to be safe than sorry.

Additionally, if you notice anything suspicious, check OpenAI’s official support pages. Follow their recommended steps and contact them if needed. Monitor your accounts and your financial statements, and file reports or change passwords as necessary.

Final thoughts

The OpenAI 20-million-email data leak incident proved to be both false and authentic: the claimed breach of OpenAI never happened, but the credentials on offer are real. This is not all that uncommon on Breach Forums, where black-hat hackers promote their criminal activities with exaggerated claims to draw in a big crowd of possible customers.

Beyond that, this incident teaches us that AI credentials could very well be the next big thing in cybercrime. As millions of users now have accounts on AI platforms and their personal data is stored on the platforms’ servers, it is only a matter of time before threat actors begin to target this industry to steal credentials and personal data.

This news also proves that stealer malware can effectively exfiltrate AI platform credentials from users’ devices, even when the platforms themselves provide state-of-the-art technologies. The AI industry must face the cybersecurity threats coming its way and patch things up fast, before it’s too late.