Apple’s new iOS 18, unveiled at WWDC 2024, drew positive first reactions from the international tech press and iPhone users. The new operating system, supercharged with AI, is Apple’s counter-offensive move to gain ground in the AI race.

Apple said that its AI, dubbed Apple Intelligence, is developed with privacy at its core. The AI runs on-device and in the new Private Cloud Compute. However, as with the integration of any GenAI tool, it raises concerns about privacy and about shadow apps (the use of non-authorized apps) in the workplace.

Apple’s AI integration is an “unacceptable security violation,” Elon Musk says

On June 10, the very same day as WWDC, Elon Musk posted a series of tweets on X (formerly Twitter) with harsh words for Apple. Musk claimed, “Apple has no clue what’s actually going on once they hand your data over to OpenAI.”

Musk threatened that if “Apple integrated OpenAI at the OS level,” he would ban all Apple devices at his companies, including Tesla, SpaceX, Neuralink, X, and others. He added that visitors would have to check their Apple devices at the door, where they would be stored in a Faraday cage.

A Faraday cage is an enclosure made of conductive material that blocks electromagnetic fields, so devices placed inside it cannot send or receive wireless signals.

As the international press echoed Musk’s disruptive words, Moonlock reached out to experts to get their views.

Jerrod Long, SVP and Director of Digital Innovation at Doe-Anderson, a brand-building company, is a software development expert who works on integrating technologies such as AI, Web 3.0, and blockchain. He told Moonlock that Musk’s concerns are valid “to some extent.”

“By integrating AI at the OS level, it means that AI functionalities would be deeply embedded within the core software of Apple devices, potentially having access to a wide range of system resources, data, and user interactions,” Long said.

Long explained that AI integration could introduce several risks, such as:

- Access to sensitive data

- Control and privacy issues

- Vulnerability to exploits

- Data sharing and usage threats

The potential dangers of access to user data

AI models require data to generate results, and in the case of iPhone users, this means having access to users’ personal data.

“If AI functionalities are integrated at the OS level, they could potentially have access to sensitive data, including personal information, business data, and confidential communications,” Long said.

“Such deep integration could also mean that the AI system has significant control over device functionalities, raising concerns about privacy and data security. Additionally, ChatGPT vulnerabilities could be exploited by bad actors, representing a problem for Apple and iPhone users.”

“There could be concerns about how data is shared and used by the AI system,” Long added, “especially if it involves cloud-based processing or external data transfers.”

A lack of clarity and transparency: How exactly does Apple Intelligence work?

The main problem with Apple’s integration of ChatGPT is the lack of clarity and information regarding how this new AI works and how it integrates models. For example, we know that the AI that runs on iOS 18 is Apple Intelligence. However, it is unclear how much of ChatGPT’s features or code are part of Apple Intelligence.

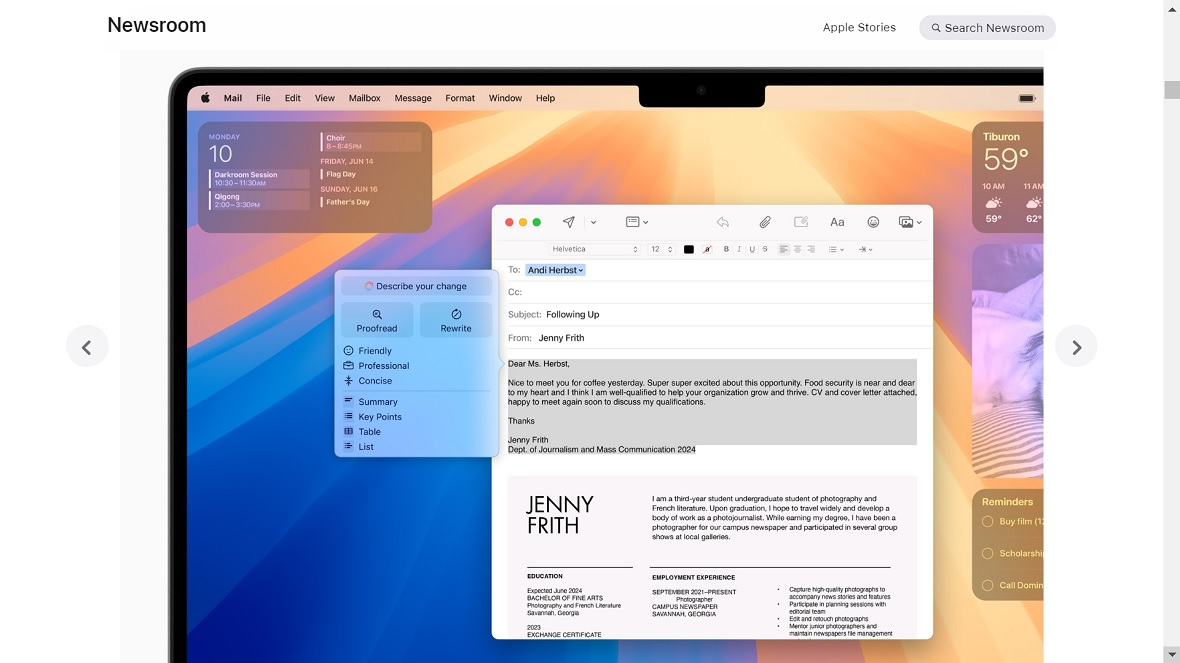

Apple has mentioned that Apple Intelligence integrates several models and that more models could be added in the near future. In other words, Apple Intelligence serves as a foundation for AI integrations.

Running AI on your iPhone and on Apple’s new cloud

Apple explains that Apple Intelligence runs on the device itself, at the hardware level. This means that the data it processes (user data included) stays on the device, with one exception: requests that the on-device models lack the computing power to handle.

When users ask Apple Intelligence to do something that exceeds its on-device processing capabilities, Apple Intelligence will automatically send the request to the new Apple cloud, where AI servers handle the request.
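As a rough illustration of the routing described above, the following sketch decides whether a request stays on the device or goes to Private Cloud Compute. It is a simplified model built on assumptions: the names `Request`, `estimate_cost`, `route`, and the `ON_DEVICE_BUDGET` threshold are hypothetical, and Apple has not published its decision logic in this form.

```python
from dataclasses import dataclass

# Hypothetical capacity limit for the on-device model; not a real Apple figure.
ON_DEVICE_BUDGET = 100


@dataclass
class Request:
    prompt: str


def estimate_cost(request: Request) -> int:
    """Toy stand-in for task complexity: the number of words in the prompt."""
    return len(request.prompt.split())


def route(request: Request) -> str:
    """Return where the request would be handled in this simplified model."""
    if estimate_cost(request) <= ON_DEVICE_BUDGET:
        # Small enough for the local model: the data stays on the device.
        return "on_device"
    # Too demanding for the device: the request is sent to the cloud.
    return "private_cloud_compute"
```

In this toy model, only requests that exceed the local budget ever leave the device, which mirrors the behavior Apple describes.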

According to the company, this new cloud, which Apple calls Private Cloud Compute, has the strictest security and privacy controls. Apple also asserts that no one, not even Apple itself, can view the data that a user sends to Private Cloud Compute.

The on-device AI processing approach is believed to be one of the safest ways to run AI systems, but integrating a third party into an OS is not without risks. ChatGPT is deeply woven into writing apps, Siri, and many other new features at the OS level.

Sending data to the cloud and back also carries certain risks, ranging from unauthorized access to data interception, cloud breaches, and even malicious leaks of the sensitive data that the AI handles.

The third-party problem is at the core of the controversy

Julien Salinas, CEO at NLP Cloud, an OpenAI alternative API focused on data privacy, told Moonlock that the main problem comes from the fact that Apple will have to send its users’ data to a third party.

“Elon Musk believes that Apple is not capable of ensuring that OpenAI will protect users’ security and privacy,” Salinas said.

Salinas explained that Apple has emphasized that it will use not only OpenAI’s external API but also, in some cases, its own AI models, which run on the devices themselves. These on-device models do not send information over the internet to perform tasks like generating images and predicting text.

Additionally, when a device connects to one of Apple’s AI servers (Private Cloud Compute), the connection is encrypted, and the server deletes any user data after the task is finished.

“Even if Apple tries to be reassuring, I agree 100% with Elon Musk: sending Apple’s user data to another company like OpenAI is a data privacy and a security concern,” Salinas said.

Salinas highlighted previous OpenAI data exposure incidents and said the company is still young in the cybersecurity game.

Should organizations trust Apple with their data?

Alan Brown at Next DLP, a data protection company, told Moonlock that, compared with other vendors, Apple has taken extraordinary steps to improve the privacy of user data when using machine learning technology.

“However, using Apple’s cloud service still requires a degree of trust in the vendor’s promises,” Brown said. “One thing widely known about LLMs is that they require vast quantities of information to process as training data.”

Brown explained that with the integration of ChatGPT (and promises of other services being integrated over time), the accidental release of personal or organizational data to one of these services is still possible.

“It’s unclear what organizational controls Apple will provide should an organization such as an electric car manufacturer, microblogging service, or commercial space launch company wish to avoid leaking sensitive data to OpenAI or others,” Brown said, referring to Elon Musk’s companies.

The benefits of healthy skepticism

Brown warned that organizations should maintain a degree of healthy skepticism regarding any information transfer to a third party. “Any remote service handling sensitive data can have compliance or legal requirements depending on your industry or location,” the data protection expert said.

According to Brown, security tools can provide visibility into sensitive data flows, helping organizations and individuals determine for themselves whether the risks of integration are real.
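To make the idea of visibility into sensitive data flows more concrete, here is a minimal sketch of how a tool might flag and redact obvious identifiers in a prompt before it is sent to an external AI service. The patterns and the `redact` function are illustrative assumptions, not the logic of any vendor’s product, and real data protection tools rely on far richer detection.

```python
import re

# Illustrative patterns only; they catch simple cases and will miss many others.
PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> tuple[str, list[str]]:
    """Replace matches with placeholders and report which kinds were found."""
    found = []
    for label, pattern in PATTERNS.items():
        # subn returns the rewritten text and the number of replacements made.
        text, count = pattern.subn(f"[{label.upper()} REDACTED]", text)
        if count:
            found.append(label)
    return text, found
```

A check like this could run before a prompt leaves the device or the corporate network, giving the organization a record of what kinds of data employees attempt to share.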

“It remains to be seen how Apple will handle this with their own models over time, given the highly competitive landscape,” Brown said.

Clyde Williamson, Senior Product Security Architect at Protegrity, a company building data protection solutions, also told Moonlock that he has reservations about Apple’s integration.

“OpenAI’s ChatGPT is hosted by a third party, OpenAI — which is hosted by Microsoft and now potentially on servers owned by Apple,” Williamson said.

“This means that everything we say to ChatGPT goes to these servers. Privacy data, health information, business content discussed with ChatGPT, etc., could all be stored and exposed on systems under someone else’s control.”

Williamson said he agrees with the basic premise of Musk’s concerns. “A Tesla employee using an Apple phone may use that phone to ask ChatGPT some work-related questions and accidentally leak sensitive business information outside of the corporation,” Williamson said.

Is ChatGPT integration a serious risk for Apple users?

Moonlock asked Williamson how big the threat to Apple users is. His answer? It’s not that grand.

Williamson explained that countless companies use third-party integrations such as Google Docs, Google Drive, OneDrive, Salesforce, or other SaaS apps that store sensitive information on third-party servers.

“What is really the bigger risk, something someone said to GPT in a chat, or the new architecture document you have stored in OneDrive that lays out the new design of your product?” Williamson asked. “Is it more dangerous for it to leak that you talked to ChatGPT about a customer, or for all of your customer contacts to be compromised in Salesforce?”

Williamson added that Tesla, along with almost every modern car manufacturer, also records massive amounts of data about users and sends it back to its own “third-party” servers.

“Every smartphone, car, AI device, mobile device, IoT device, etc., is constantly sending data about us,” Williamson continued. “Every social media platform is harvesting our behaviors and responses to manipulate what we experience on their platform.”

“Would Apple integrating ChatGPT be a major shift or some new existential crisis? Absolutely not,” Williamson asked and answered. “It’s just the latest service that offers features at the expense of your privacy.”

What should iPhone users do?

Apple’s latest iPhones boast impressive new AI features, some of them powered by ChatGPT, but privacy concerns loom. Experts worry about user privacy and data being exposed to third parties. There’s some truth to this: AI systems like ChatGPT need data to function, and user data could be involved. Additionally, vulnerabilities in ChatGPT could be exploited.

However, Apple has implemented security measures to address these concerns. The company emphasizes processing data directly on iPhones and promises robust security in its Private Cloud Compute servers. This approach minimizes the amount of data leaving users’ devices.

So, should iPhone users be worried? Experts recommend a balanced approach. Weigh the potential risks against the benefits of the new AI features. Stay informed about updates and security measures. Finally, practice good security habits and be mindful of the information you share with AI assistants. By being aware of the potential risks and taking precautions, iPhone users can still benefit from the new AI features.

As the experts said, AI is a new technology that offers users numerous benefits. But, like any innovation, it also carries hidden costs in the form of potential risks.

This is an independent publication, and it has not been authorized, sponsored, or otherwise approved by Apple Inc. iPhone and Apple Intelligence are trademarks of Apple Inc.