Users around the world have shifted in large numbers from search engines like Google to AI platforms. With this shift, the core business model that has kept the internet running for decades (ads and sponsored content) has changed completely.

Following global user traffic, companies now want to run ads on AI platforms, and most AI companies, from Google to xAI, Amazon, Meta, and OpenAI, either allow ads in some form or plan to allow them soon. Unfortunately, legitimate businesses are not the only ones that chase global online traffic trends and invest in digital ads.

The question is, will malvertising, a technique in which cybercriminals bypass ad platform security guidelines to run ads that direct users to malicious sites, also move away from search engine giants and onto AI platforms? We turned to leading security experts to answer this and other AI security questions.

Ads will start rolling out on ChatGPT, but is the platform ready to combat malvertising?

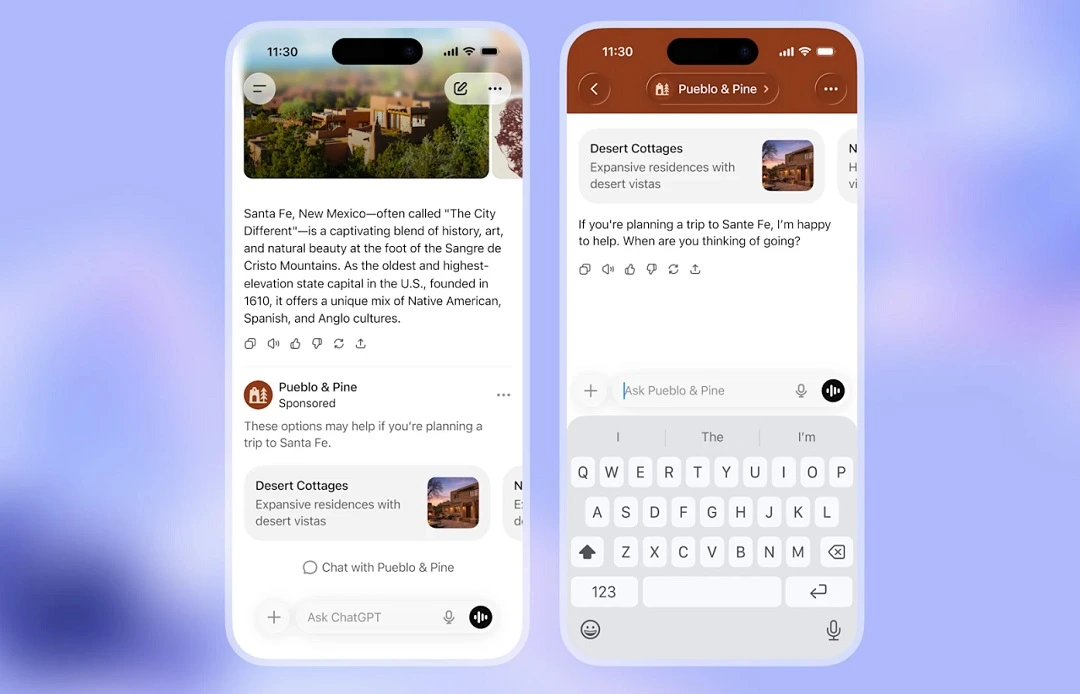

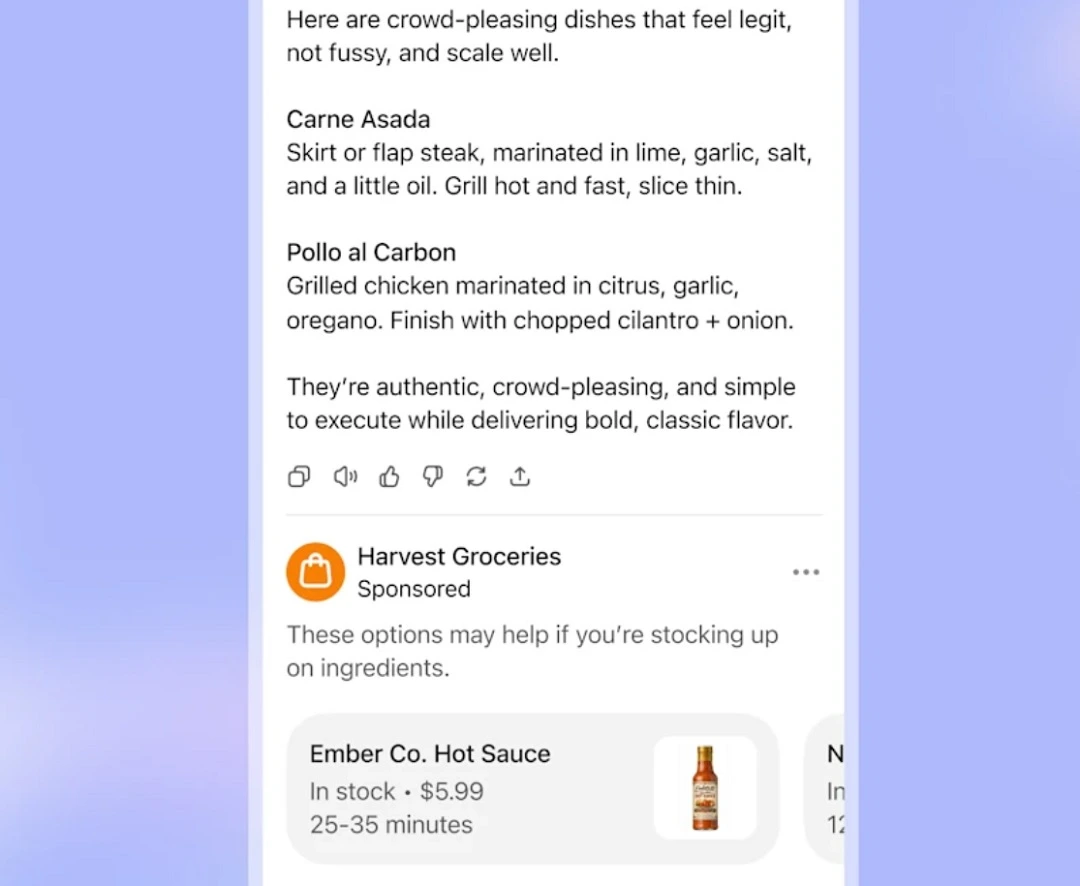

Recently, OpenAI announced the start of tests for ads set to run on ChatGPT.

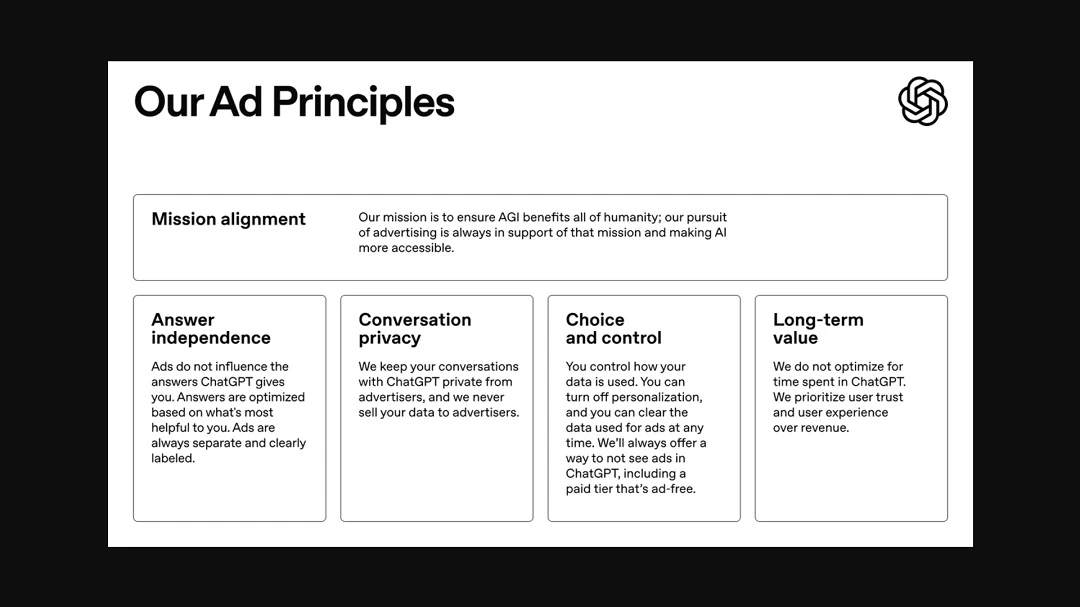

The company said it would share “principles” on how it will approach ads, assuring users that it would put transparency first.

While OpenAI addressed important issues, such as how the platform will use the data you type into the chatbot to “personalize your ad experience” and the fact that paid tiers will not have ads, it did not address the security checks it will use to keep cybercriminals from running ads on ChatGPT.

In fact, the company’s advertising principles do not address cybersecurity at all.

What would AI malvertising even look like?

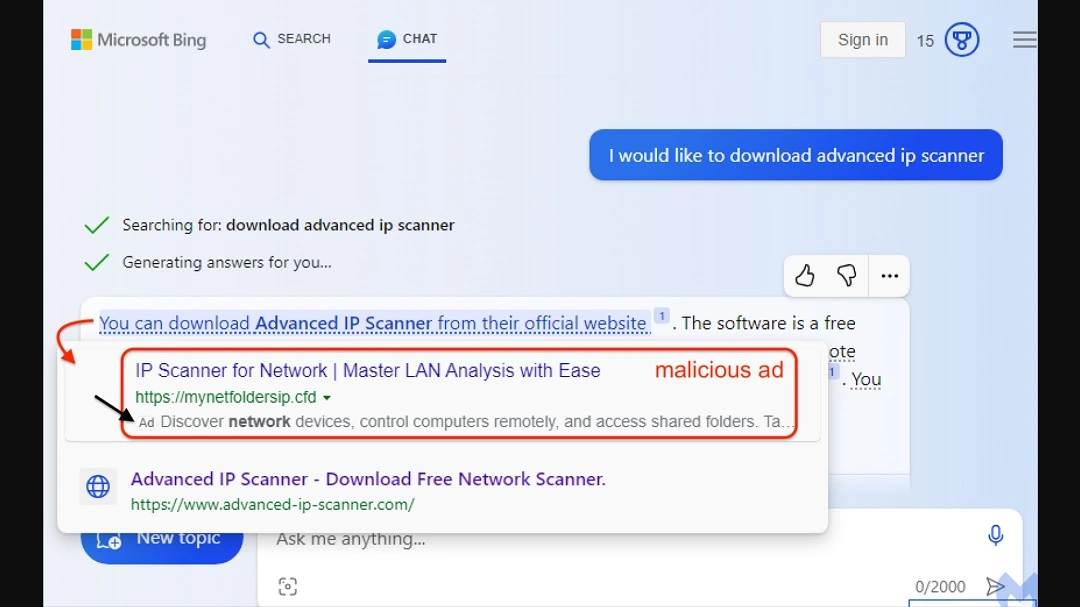

In September 2023, Malwarebytes reported that ads in Bing Chat, Microsoft’s AI-assisted search engine powered at the time by OpenAI’s GPT-4, were being used to spread malware. While that campaign was a clear case of AI malvertising, the technique did not become a popular way to spread malware.

Since 2023, however, the number of AI platforms and AI chatbot users has grown rapidly, making the entire sector, and AI malvertising in particular, attractive to threat actors.

“AI platforms are absolutely on the radar for cybercriminals,” Trevor Horwitz, CISO and Founder at TrustNet, told Moonlock.

These tools are trusted, widely used, and conversational by design, which lowers the user’s guard, Horwitz said.

“If a malicious ad or prompt is presented inside an AI chat, especially if it looks like a legitimate recommendation, users are more likely to click,” he added.

“That’s what makes this threat different,” Horwitz said. “It feels like advice, not an ad, and if the attacker uses AI to tailor the language and timing, it becomes even harder to spot.”

What vulnerabilities could cybercriminals exploit to breach AI malvertising guardrails?

Given the level of trust that most users have in ChatGPT and similar AI bots, if malvertising manages to breach OpenAI’s ad system and platform, the spread of malware and other cybersecurity threats could be significant.

“Malvertising rarely looks overtly malicious to users,” Dane Sherrets, Staff Innovation Architect at HackerOne, told Moonlock.

Malvertising typically appears as a relevant promotion or recommended resource inside a trusted environment, with the risk emerging after the click, when users are often redirected through multiple pages to a new destination, said Sherrets.

“As platforms introduce dynamic content and monetization layers, the core challenge becomes managing trust boundaries and treating anything rendered client-side as part of the attack surface,” Sherrets added.
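To make the redirect pattern Sherrets describes more concrete, here is a minimal sketch in Python. It assumes you already have a recorded list of the URLs a click passed through (for example, from a browser's network log). The function name `summarize_redirects` and the example URLs are hypothetical, and a long chain that ends on an unrelated site is a reason for caution, not proof of malice.

```python
from urllib.parse import urlsplit


def summarize_redirects(chain):
    """Summarize a recorded redirect chain (a list of URLs, first to last).

    Hypothetical helper for illustration only: it does no network access
    and simply inspects the URLs it is given.
    """
    # Extract the lowercase hostname of every hop; missing hosts become "".
    hosts = [(urlsplit(url).hostname or "").lower() for url in chain]
    # dict.fromkeys removes duplicates while keeping first-seen order.
    distinct_hosts = list(dict.fromkeys(hosts))
    return {
        "hops": max(len(chain) - 1, 0),
        "hosts": distinct_hosts,
        # True when the click ends on a different host than it started on.
        "ends_off_site": len(hosts) > 1 and hosts[0] != hosts[-1],
    }


# Example with made-up URLs: an ad click that bounces through a tracker.
example_chain = [
    "https://chat.example/ad/123",
    "https://track.example/r?id=123",
    "https://landing.example/download",
]
summary = summarize_redirects(example_chain)
```

In this made-up example, the click makes two hops across three different hosts and ends off-site, which is the kind of path worth a second look before downloading anything.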

HackerOne’s 2025 Hacker-Powered Security Report found that misconfiguration vulnerabilities increased 29% in the past year. Misconfigurations, closely tied to client-side exposure and third-party integrations, along with script injection and browser-based manipulation through third-party dependencies, are conditions that attackers commonly rely on in malvertising campaigns, even when ads or embedded content appear benign at first glance.

The report also found that in 2025, 13% of organizations reported an AI-related security incident. Of those, 97% lacked proper AI access controls.

Additionally, HackerOne has seen a 210% increase in valid AI-related vulnerability reports. Of those reports, 65% were AI security issues such as prompt injection, model manipulation, or exposed endpoints, while 35% fell into the AI safety category, including ethical misuse or output integrity.

Keywords and user intent driving AI ad personalization could serve cybercriminals

While full transparency is yet to come from OpenAI’s ad security team (assuming such a thing exists), the way users are targeted and what information the platform uses to serve ads to its users can influence malvertising campaigns.

Clyde Williamson, Senior Product Security Architect at Protegrity, walked us through how cybercriminals could exploit keywords or user intent to spread malicious ads on ChatGPT.

“In a ChatGPT world, we could imagine ‘Help me write this code…’ wouldn’t be shared, but [Concept: Vibe Coding] might trigger an ad for a no-code app that makes your vibe coding even more abstract,” said Williamson.

“This would be a prime opportunity for malvertising, as a no-code app could be adding all kinds of malicious code under the hood,” he added.

Once an attacker knows that a specific ad will appear for a specific set of keywords, a prompt-injection attack, rogue tool, or MCP service might manipulate your prompt to ensure that those ads appear, he said.

“Is that likely? We don’t know, because we don’t know what OpenAI will implement or how it will monetize its users,” said Williamson.

New vulnerabilities and AI exploits are likely to emerge as cybercriminals adapt. “Whatever clever measures OpenAI uses to make its ads generate real revenue will be targets for exploitation,” Williamson told Moonlock. “That’s how cybercriminals think.”

“Most interestingly, OpenAI is aiming ads at Free and Go (the $8 tier),” Williamson added. “These represent the largest pools of users and may include a less technically savvy user base.”

“This is prime hunting territory for cybercriminals: Grandmas using ChatGPT,” said Williamson.

How to keep safe from AI malvertising

While companies like Google, Meta, and Microsoft do publish documentation on how their ad verification systems work, countless reports show that malvertising criminals often abuse and bypass these platforms. Nevertheless, there is still plenty you can do to stay safe from AI malvertising.

Do not download software directly from AI responses

“The first rule is simple: don’t download software directly from links in AI responses,” Horwitz from TrustNet told us. This is good advice, and if you make it your golden rule, it improves your security significantly.

“Use endpoint protection that’s built for Mac, keep systems updated, and treat AI-generated suggestions the same way you’d treat advice from a stranger,” said Horwitz. “Helpful, maybe, but not always right.”
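For readers comfortable with a little scripting, the rule above can be sketched as a simple check in Python. This is only an illustration under stated assumptions: the allowlist `TRUSTED_DOMAINS` and the function name `is_trusted_download` are hypothetical, and the domains listed are placeholders for vendors you have verified yourself. Passing the check does not make a file safe. It only confirms that the link points where it claims to.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist: replace with domains you have verified yourself.
TRUSTED_DOMAINS = {"apple.com", "mozilla.org"}


def is_trusted_download(url, trusted=TRUSTED_DOMAINS):
    """Return True only for HTTPS links hosted on a trusted domain.

    A host matches if it equals a trusted domain or is a subdomain of one.
    Lookalikes such as "apple.com.evil.example" do not match.
    """
    parts = urlsplit(url)
    host = (parts.hostname or "").lower().rstrip(".")
    # Reject anything that is not HTTPS or has no usable hostname.
    if parts.scheme != "https" or not host:
        return False
    return any(host == domain or host.endswith("." + domain) for domain in trusted)


# Example checks with made-up links.
official = is_trusted_download("https://support.apple.com/downloads")
lookalike = is_trusted_download("https://apple.com.evil.example/installer.dmg")
```

Matching on the full hostname, rather than searching for a brand name anywhere in the link, is the design choice that matters here, since lookalike links often place the trusted name at the start of an unrelated domain.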

Keep up with cybersecurity news

The cybersecurity scene moves fast, and cybercriminals and threat actors move even faster.

Given the volume and scale of the global digital threat landscape, threats will keep spilling over onto new platforms in ever-evolving ways. By reading your favorite or most trusted cybersecurity media regularly, you can get the inside story on how threats evolve, learn more about technology, and stay one step ahead of attackers.

Install a powerful antivirus like Moonlock

Mac’s built-in security tools can be, and are, breached every day by cybercriminals. Platforms like ChatGPT, along with most popular big tech brands, are abused and impersonated over and over. And malvertising efforts show no sign of slowing down.

To add a layer of security to your Mac, download and try out the Moonlock app. It will watch your six and check for suspicious activity on every file you interact with. This way, even when something slips by you, which happens to everyone from time to time, the app will flag it, notify you, and move the threat to Quarantine.

Moonlock also features a constantly updated malware database and a VPN for safe browsing. Plus, it offers tips on how to strengthen your Mac security settings and configurations and provides information on how to stay safe and maintain better digital habits that put an end to any type of social engineering trick a cybercriminal can throw at you. You can give it a test drive for free with a 7-day trial.

Final thoughts

It is not a matter of if but when malvertising hits platforms like ChatGPT, Grok, Claude, Gemini, and other AI chatbots. The good news is that when the waves of AI malvertising finally break, you will have the tools to deal with these new and very real threats.

This is an independent publication, and it has not been authorized, sponsored, or otherwise approved by Apple Inc. Mac and iOS are trademarks of Apple Inc.