For the past few months, Moonlock has been closely following a new trend of AI misuse and abuse. Unlike other cyber threats such as phishing or infostealers, which can be mitigated with the right security tools and best practices, AI-powered nude generators and “undress apps” are extremely difficult to stop.

In this report, we talk to experts and lawyers as new investigations reveal that millions of users around the world are using deepfake undress bots.

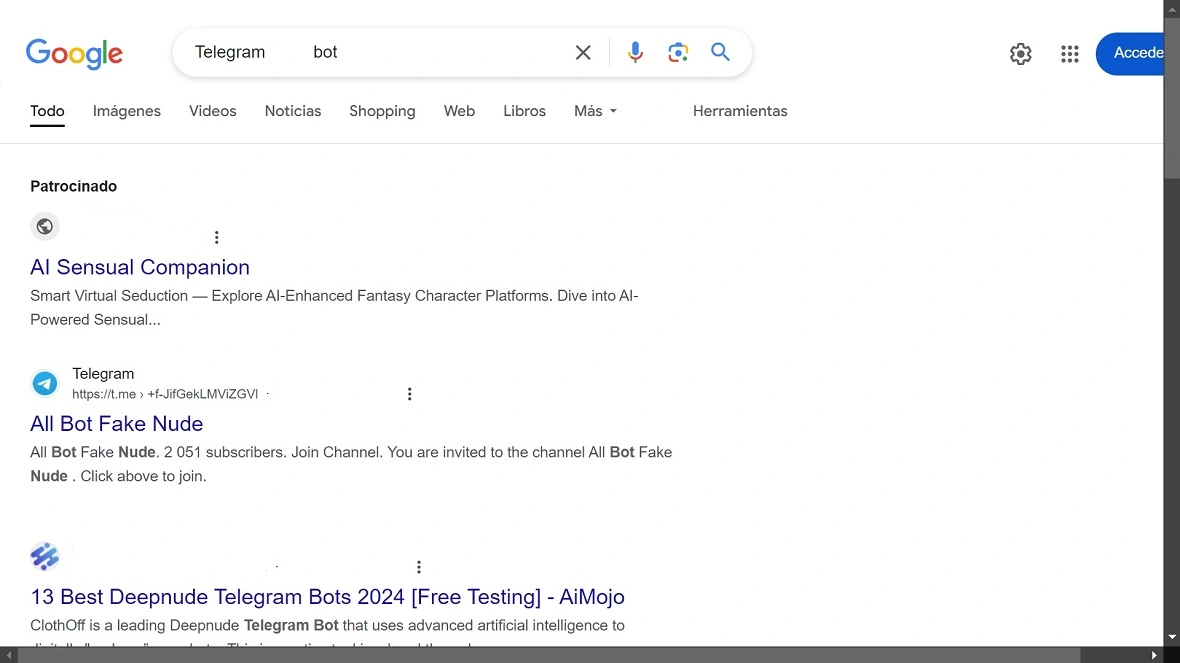

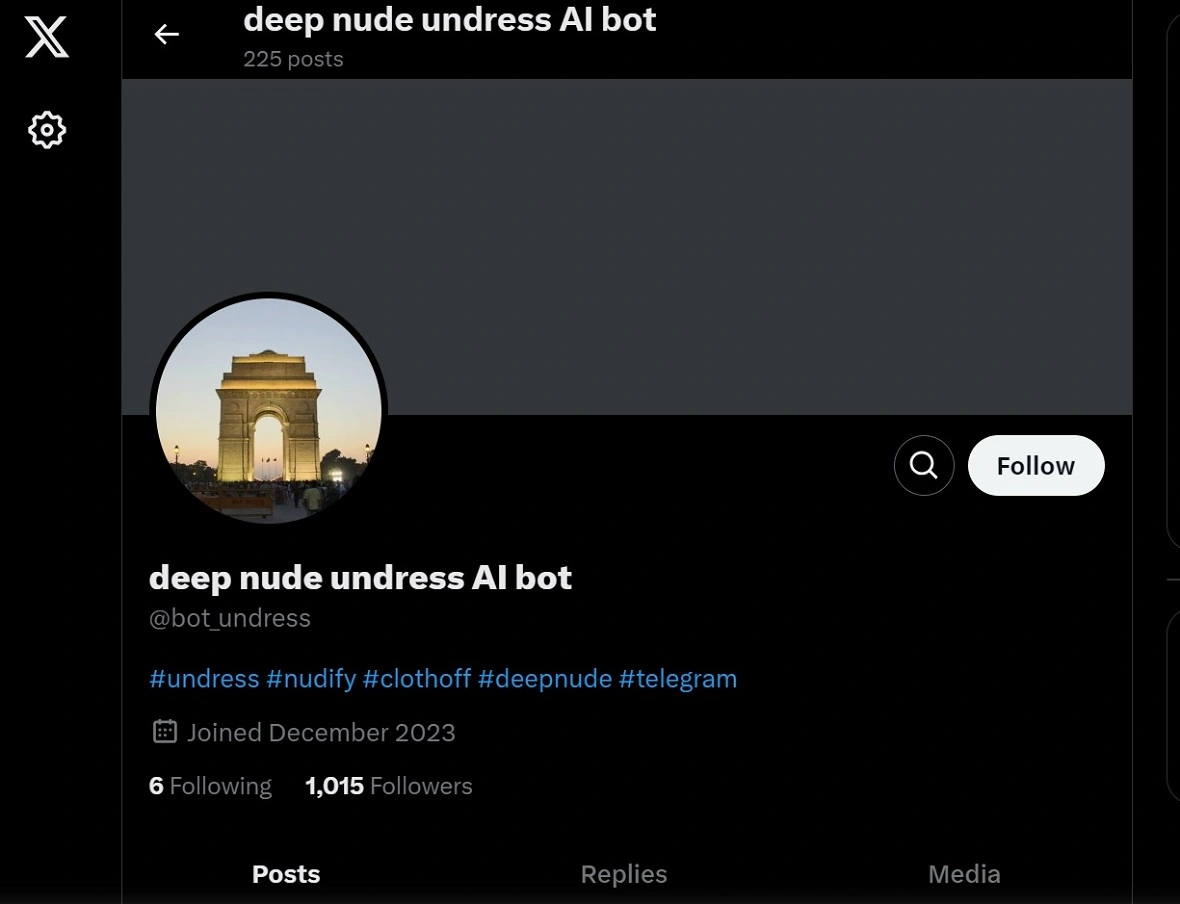

AI “Nudify” bots on Telegram used by millions of people

On October 10, a new Wired investigation revealed that bots that “remove clothes” from images are running rampant on Telegram.

These bots allow any user to generate nonconsensual deepfake nude images. Wired reviewed Telegram communities and found at least 50 bots on the platform that generate and distribute explicit nonconsensual AI-generated content.

The simplicity of these bots is frightening. A user uploads a photograph of a person (any photograph), and the bot “undresses” the victim. Some bots can even insert the victim into fake sexual acts. Wired concluded that these 50 bots have a combined total of at least 4 million monthly users.

C.L. Mike Schmidt, an attorney from Schmidt & Clark LLP, told Moonlock that victims of these deepfake nude generators have legal options, but they’re still somewhat limited in certain jurisdictions.

“In many places, distributing nonconsensual explicit images, often referred to as ‘revenge porn,’ is illegal, and deepfakes fall under that umbrella,” Schmidt said.


Schmidt explained that victims can file lawsuits for defamation, invasion of privacy, or emotional distress, depending on the laws in their region. “However, the challenge usually lies in tracking down the perpetrators, especially when these bots are operated anonymously or from overseas,” Schmidt said.

Deepfake nudity is out of control

Unfortunately, from sextortion to cyberbullying, the misuse of generative AI often targets children and teens. Naturally, parents are distressed, and rightfully worried.

Such images are most commonly used to bully classmates or as “revenge” porn, causing deep emotional harm to underage victims, their families, and their friends. Other incidents, however, are cases of “online enticement.”

Online enticement is when an individual communicates with someone believed to be a child via the internet with the intent to commit a sexual offense or abduction.

Between 2021 and 2023, the number of online enticement reports increased by more than 300%. In 2023, the CyberTipline operated by the National Center for Missing & Exploited Children (NCMEC) received more than 186,800 reports of online enticement. In 2024, through October 5, NCMEC had already received more than 456,000 such reports.

Laws lag behind technological innovations

Irina Tsukerman, a national security and human rights lawyer, told Moonlock that, as with any new technology, the laws and regulations are lagging behind.

Tsukerman said that in the United States, federal deepfake legislation has stalled over concerns that it could violate the First Amendment. However, existing federal and state laws may apply, depending on the case. Tsukerman explained that there are efforts to fit new and creative abuses of technology into existing tort and criminal law frameworks.

“Some examples include the use of the Interstate Anti-Stalking Punishment and Prevention Act, which makes it a felony to use any ‘interactive computer service or electronic communication service, to engage in a course of conduct that causes, attempts to cause, or would reasonably be expected to cause substantial emotional distress to a person,’” Tsukerman said.

Additionally, Tsukerman said that nonconsensual nudes would likely fall under the general rubric of “deepfaked nonconsensual pornography” and be treated the same way.

The movement to criminalize nonconsensual deepfakes

Applying the Interstate Anti-Stalking Act to deepfakes may be a bit of a stretch, but the law has already been applied to other types of nonconsensual pornography distribution, on the grounds that the online dissemination and reach of such images arguably cross state lines.

To date, there is no specific federal law that criminalizes deepfake nonconsensual pornography (DNCP). As a result, perpetrators are prosecuted under either indirectly related federal laws or state laws.

More than half of American states have enacted or are exploring laws designed to criminalize the creation and distribution of deepfakes, but many of these laws focus on election-related content rather than nudity.

In the United Kingdom, there is currently an effort to introduce a specific provision that would outlaw DNCP (and related deepfaked nonconsensual imagery). In the US, however, Tsukerman warned that the issue is still in the very early stages of debate.

Where does this leave those looking to pursue legal action?

Tsukerman listed several laws that can be used to prosecute criminal offenses related to deepfake nudity, including the Copyright Act of 1976 and the criminal provisions of the Computer Fraud and Abuse Act, which prohibits computer hacking and the distribution of information obtained through it. The latter could serve as a remedy if the source images were obtained via hacking.

Additionally, the Video Voyeurism Prevention Act of 2004 punishes anyone who has “the intent to capture an image of a private area of an individual without their consent, and knowingly does so under circumstances in which the individual has a reasonable expectation of privacy.”

The only successfully enacted federal legislation related to deepfakes is the National Defense Authorization Act for Fiscal Year 2020. However, this law did not establish criminal liability.

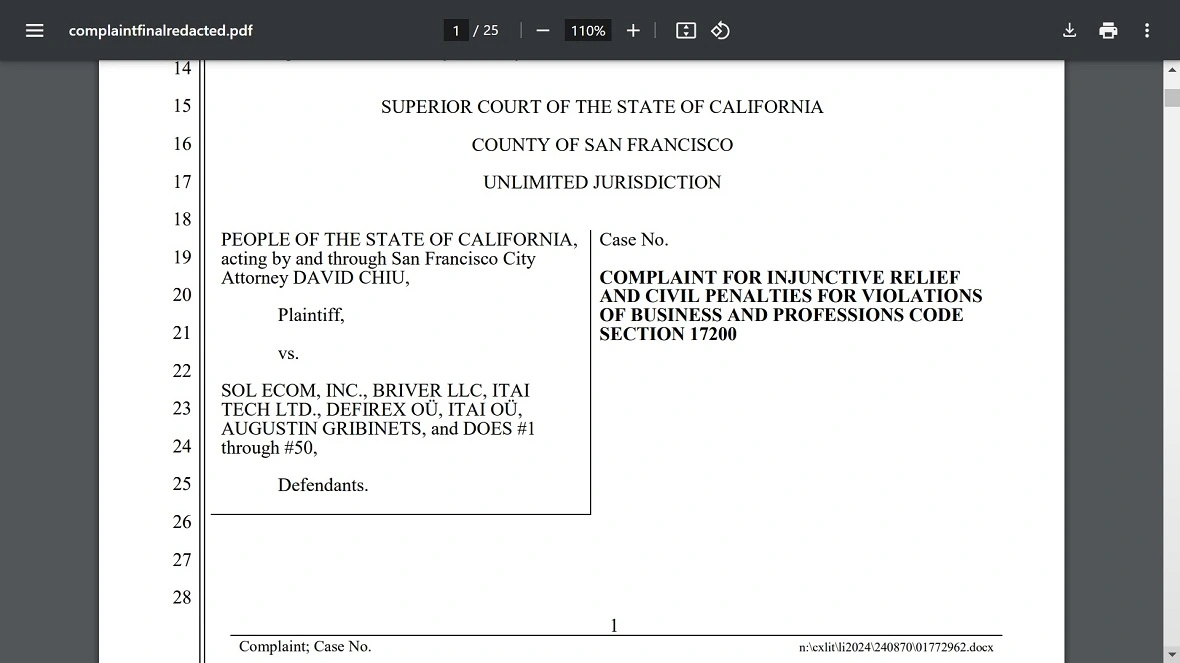

These legal gaps have forced those bringing cases against deepfake nudity to get creative. For example, the State of California, acting through San Francisco City Attorney David Chiu, is suing a wide range of companies, along with up to 50 unknown owners of deepfake nude generator sites, for injunctive relief and civil penalties for violations of Business and Professions Code Section 17200.

Platforms, threat actors, AI creators, or governments: Where does the buck stop?

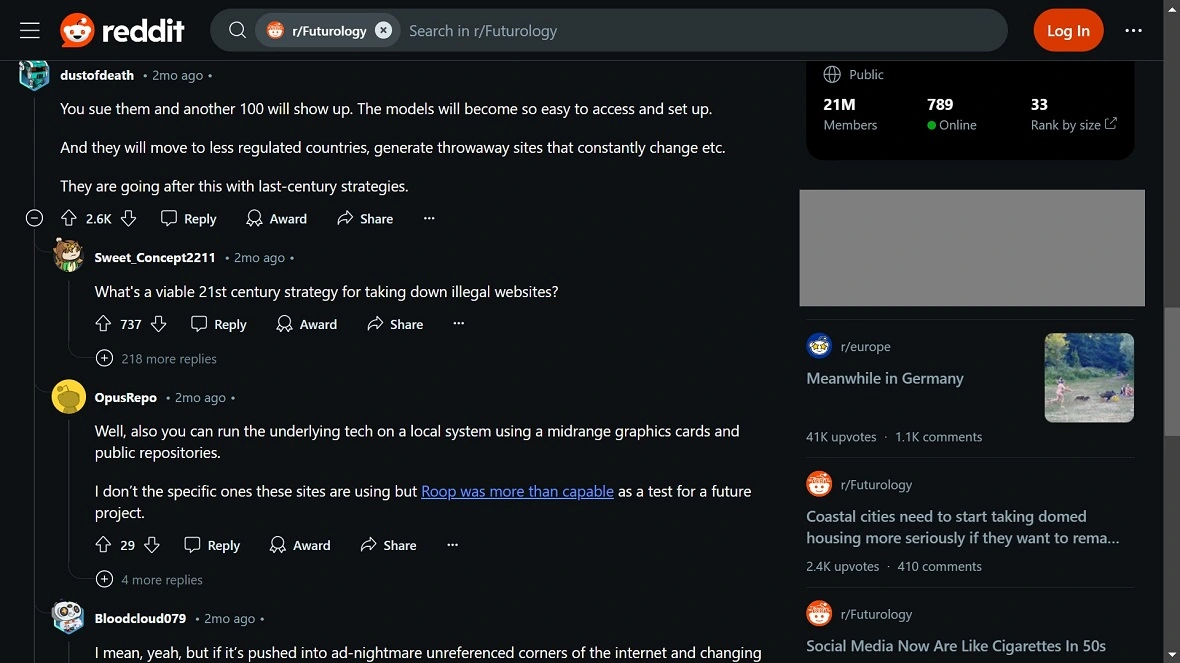

Over the past several months, a familiar question has resurfaced: Who is responsible for content hosted on social media platforms? Governments, tech companies, or the bad actors who abuse the technology?

Tsukerman explained that tech companies are still safeguarded by Section 230 of the Communications Decency Act, which grants blanket immunity to platforms for user-generated content.

“Section 230 has shielded Internet companies from liability in defamation, negligence, IIED, and privacy claims,” Tsukerman said.

“The last remaining tortious remedy is to go after the distributors,” Tsukerman concluded, noting the time, emotional stress, and cost that legal action demands of victims.

Tsukerman called for task forces to be integrated into platforms to monitor the misuse of AI, an endeavor that will require the help of lawyers, ethicists, psychologists, and law enforcement, not to mention international cooperation.

Tsukerman added that parents should avoid uploading images of their children to the internet, especially while they are minors. “Do not upload their photos and videos,” said Tsukerman.

The need for stricter regulations

Schmidt told Moonlock that, in his view, platforms and governments alike have a role to play.

“Platforms like Telegram should absolutely be held responsible for hosting these malicious bots,” Schmidt said. “If they can control other types of content, they should be able to detect and remove bots that are generating harmful material.”

“That said, law enforcement and governments need to step up, too,” Schmidt added. “They should implement stricter regulations and collaborate internationally to create frameworks that make it harder for these tools to operate unchecked.”

“There are other remedies that could be used to assist with putting a stop to it, such as the use of detection technologies to disrupt the dissemination of such imagery,” Tsukerman explained. “Another option is to create incentives for online companies to use these technologies to combat the creation and dissemination of such images.”

What can parents do?

Nicole Prause, senior statistician in addictions research at UCLA, studies the effects of pornography and is involved in ongoing debates about the effects of nudify apps. Beyond coordination between law enforcement and app makers, and clear, transparent policies and features that help identify and shut down the creators of these bots, Prause said parent-child communication is critical.

Prause said parents should become familiar with professional porn literacy programs, which research has shown to be effective.

“These programs include basic information for youth about the risks in sharing nude images of themselves or others, and to contact trusted adults when they witness illegal behaviors,” Prause said.

Prause explained that research has shown young people’s responses in these situations largely reflect their parents’ level of distress.

“This does not mean that a parent should downplay the violation of these images for their youth, but to take time to manage any initial emotional reactions that could distress their child further,” Prause added.

“Reassuring youth that this was a criminal act by someone else, and not their fault, may be helpful as you navigate reporting to law enforcement.”

Final thoughts

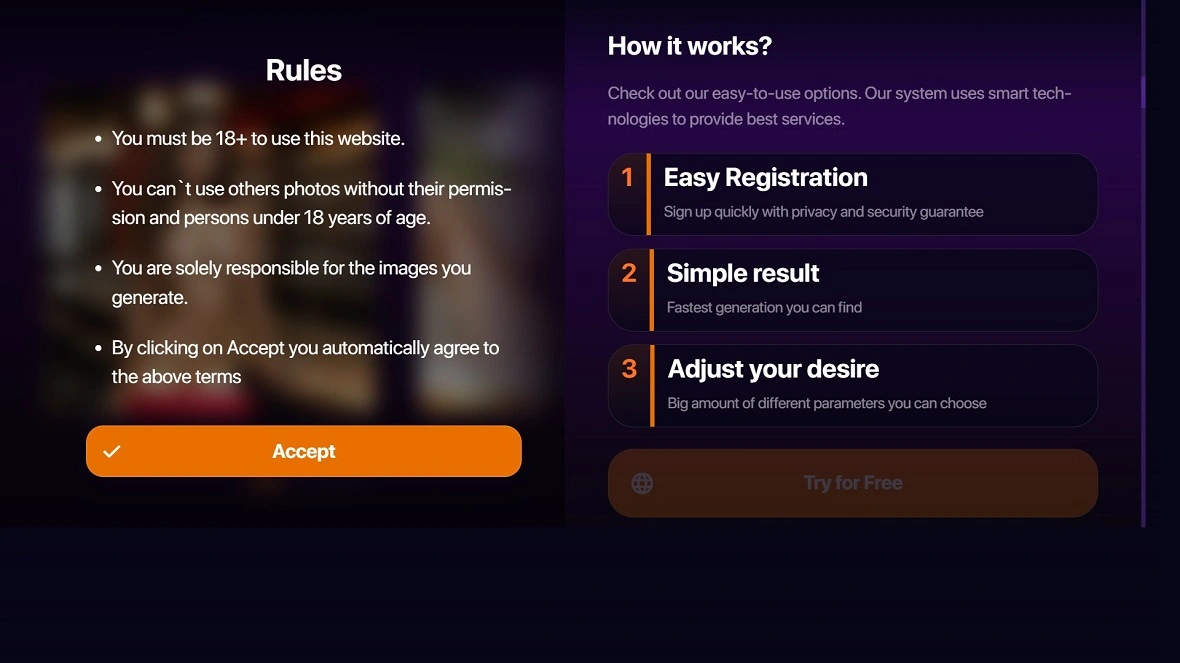

Deepfake nude generators, which have been linked to cybercriminal gangs and malware distributors, are widespread online.

These apps and services cause incredible damage to victims, yet anyone can find and access them through a simple search in any browser or social media app. The number of Telegram nude bots and online nude apps Moonlock found is shocking, staggering, and despicable.

It is evident that, at this time, new laws are needed to combat this often transnational, criminal, and malicious behavior, and that social media platforms and even search engines are doing little to curb the growth of this disgusting trend.

These apps represent a grave danger, operate in legal grey areas, abuse vulnerable populations, including children and teens, and have led to victims dying by suicide.

From a cybersecurity perspective, shutting down deepfake nude generators or “undress apps” is a nightmare. There is no vulnerability to patch, no malware to flag or remove, and no specific vector of attack. The worst-case scenario is already here: Deepfake nude bots are everywhere.

Governments, law enforcement, child protection professionals, social media companies, and lawyers need to team up to put an end to the trend of AI nude apps.