If you worked in accounting or finance and your boss called asking you to make a transfer, chances are you would do it, especially if the request came face to face.

That is exactly what happened to a finance officer at a multinational firm in Hong Kong. When the firm’s UK-based chief financial officer asked him to make a transfer, he complied, sending a total of HK$200 million (about US$25.6 million). Unfortunately, it all turned out to be a scam.

Hong Kong Free Press reported on February 5 that 15 wire transfers were made in total. At a press conference, Hong Kong police said this was one of the first cases of its kind in the city. What made it so unusual? Deepfake AI.

How the deepfake heist happened

While the name of the multinational corporation that lost roughly US$25.6 million to the scam has not been made public, police have released enough details to give a clear picture of how the heist unfolded.

CNN reported that the victim, a Hong Kong-based finance worker, initially had doubts. He had received an email calling for “a secret transaction” and suspected it might be a phishing attempt.

But at the press conference, Acting Senior Superintendent Baron Chan Shun-ching explained that the worker’s doubts were put to rest when the fake CFO invited him to a video call with several other staff members, some of whom he already knew. The call evidently worked for the scammers: afterward, the victim began making the large transfers.

“(In the) multi-person video conference, it turns out that everyone [he saw] was fake,” the Acting Senior Superintendent said.

Hong Kong police said the investigation was still ongoing and that no arrests had been made. However, they did announce six arrests connected to similar scams.

Multi-person deepfakes: the evolution of a criminal trend

As Moonlock recently reported, deepfakes are among the top 10 macOS security trends to look out for in 2024.

One-on-one deepfake video and audio attacks are becoming very common, but this case involved digitally impersonating several people at once. That is what makes it particularly interesting from a cybersecurity point of view.

Despite the attack’s sophistication, Hong Kong police said the deepfake videos were prerecorded and did not involve dialogue or interaction with the victim. However, the technology to create live deepfakes that can respond to questions already exists.

It doesn’t take much raw data to create a convincing deepfake. In the Hong Kong case, the criminals got everything they needed from YouTube.

“Scammers found publicly available video and audio of the impersonation targets via YouTube, then used deepfake technology to emulate their voices… to lure the victim to follow their instructions,” the Acting Senior Superintendent said.

Keeping up with deepfake AI threats

Hong Kong Free Press reported that law enforcement agencies are under pressure to keep up with generative AI, and that pressure is hard to overstate.

Cybercriminals are exploiting the rise of generative AI and its ability to produce realistic synthetic images, video, and audio. With deepfakes, they are taking phishing, fraud, biometric attacks, and identity theft to the next level.

To create these deepfakes, many cybercriminals aren’t using the mainstream generative AI models and services found online. Instead, they turn to the dark web, where hackers offer reverse-engineered versions of top generative AI models stripped of their safety guardrails.

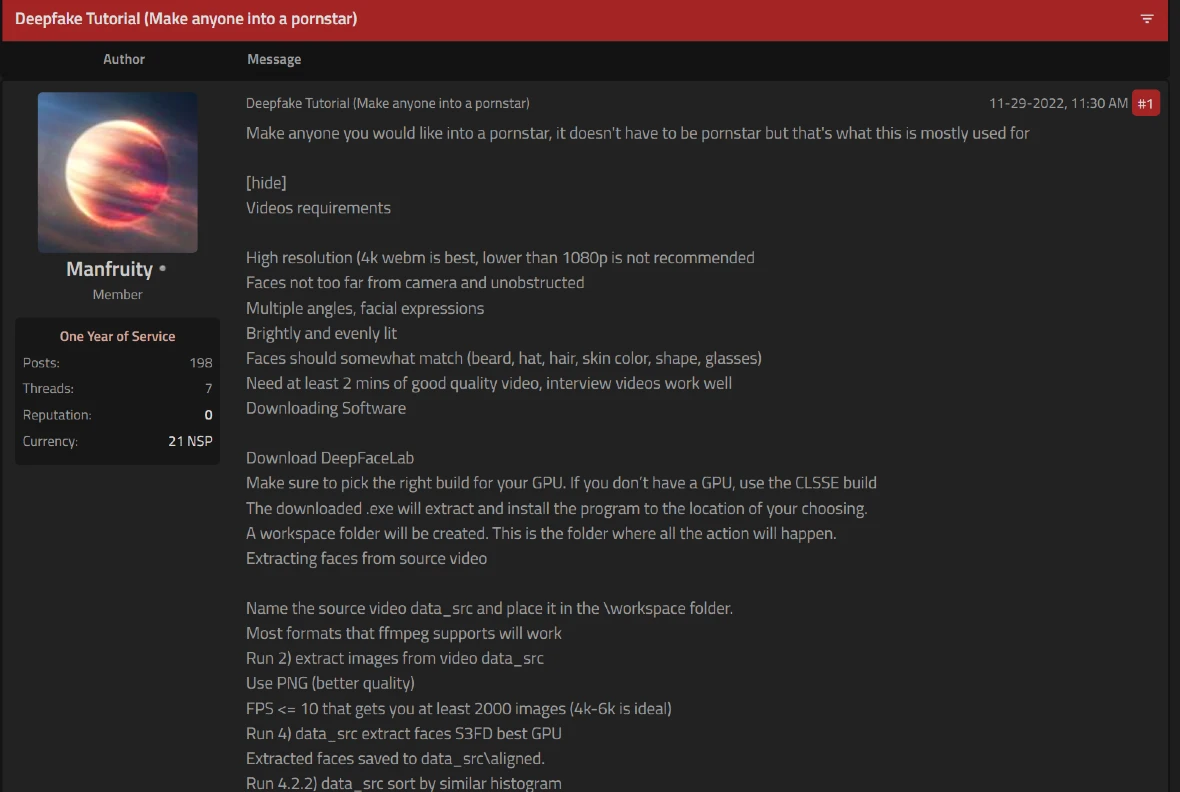

These underground tools make deepfakes more accessible, although creating them still requires some technical skill. Legitimate deepfake software, such as the popular open-source tool DeepFaceLab, is also heavily abused by cybercriminals. Guides and tutorials on creating deepfakes are openly promoted in underground forums.

How to spot new deepfake attacks

We agree with the Hong Kong police that the public should be made aware that deepfake technology has advanced beyond one-on-one impersonation to multi-person attacks.

Local police recommended that workers ask questions during meetings, which could reveal whether a meeting is fake or prerecorded. However, as mentioned, deepfake technology can also be used in live conversations. So how can you verify that the person you are talking to is who they say they are?

For businesses, our advice is to discuss deepfake threats with your IT and security teams and consider deploying AI deepfake detection solutions. Workers and everyday users, the preferred targets of deepfake criminals, can follow several tips to stay safe.

If you know the person you are meeting with, ask them about something only both of you know.

Also note that deepfake technology is still imperfect and struggles to get certain details right. Pay close attention to hands, faces, and hair. Do they blur at times? Do they shimmer, pixelate, warp, or just look off?

What about body language? Does it match what the person is saying? Deepfakes sometimes fail to sync movements with audio. You can also listen for audio glitches, unnatural speech patterns, and odd background noise.

Beyond these tips, the best security step you can take is to verify the meeting or caller. For example, if your CEO or someone from your bank asks for an urgent video meeting or call, hang up and call them back on a number you already know is theirs, or contact the real person directly to confirm they aren’t being impersonated.

You can also request additional verification without warning, such as asking the person to show ID or documents.

Final thoughts on the 2024 deepfake wave

Deepfakes hit the ground running in 2024. Recently, sexually explicit deepfake images of singer Taylor Swift went viral, causing a scandal. Around the same time, US President Joe Biden’s voice was deepfaked in a robocall campaign just as the 2024 presidential race was heating up.

Deepfake attacks are growing in both number and sophistication. Cybersecurity organizations are calling for better governance, accountability, enforcement actions, investigations, and new tools to detect deepfakes.

In this new threat environment, no single security tip is foolproof. Stay informed and always be cautious when it comes to your data and security. The person or people on the other end of the line may not be who they claim to be.