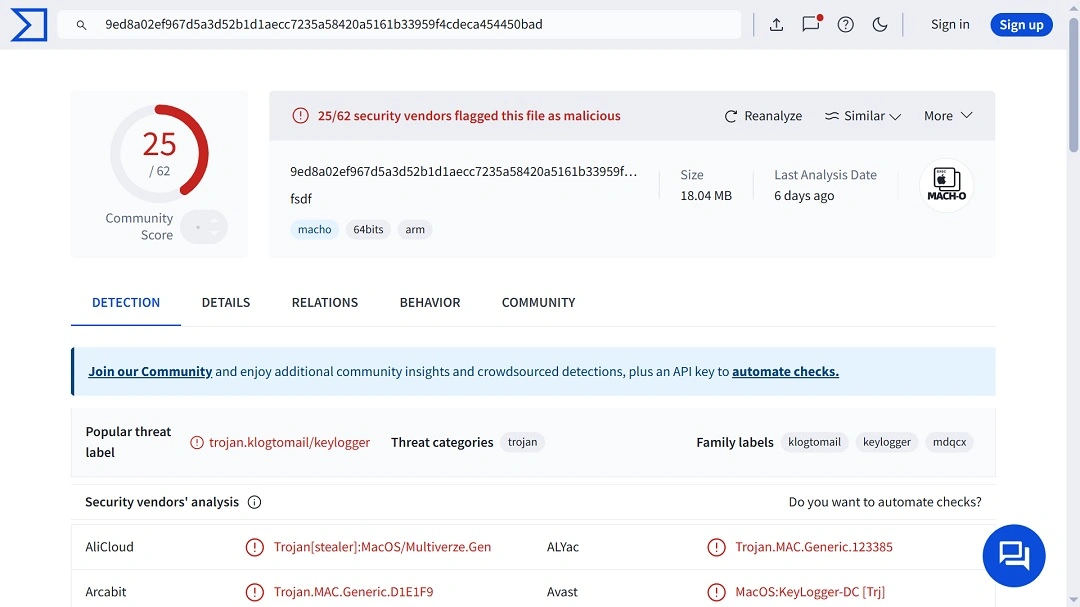

Moonlock Lab threat researchers have come across a rather unique piece of macOS malware. The sample was found as a Mach-O file, the executable file format used by macOS.

On VirusTotal, the sample has been flagged as a trojan, a keylogger, and a Python stealer. Moonlock Lab security experts are still investigating, but this malware could, in fact, be all of the above and much more. Let’s dive straight into it.

Moonlock Lab finds a unique malware sample that talks with ChatGPT

On March 5, Moonlock Lab revealed a newly discovered malware sample on X. Coded in Python and packed with PyInstaller, a legitimate packaging tool that attackers commonly abuse to distribute malicious binaries, this sample appears to be an automated phishing bot.

Despite their simplicity, these Python lines, once running on macOS, can contact ChatGPT and ask it to generate a highly personalized phishing message. The malware does this by sending the victim’s data, which it sources from libraries, to the API.

At scale, if malware like this were to infect hundreds of thousands of devices, it would have the potential to send out massive volumes of highly personalized phishing emails. This malware can also be used for spear phishing.

Some lines of the Python code in this sample resemble code seen in phishing simulation tools, which red teams and IT teams use to test how well employees resist phishing. What makes this sample unique is the addition of victim data libraries and its ability to connect to OpenAI’s GPT-4 through the API.

Fully functional traits in the malware

One of the big questions when encountering malware samples is whether the malware is fully functional. There are several aspects that need to be considered to answer this question.

The code snippets shared by Moonlock Lab show that the malware is coded to retrieve target data from libraries. However, they don’t show where that target_data comes from. So while the code may be correct, it is not functional without the specific target libraries.

How it communicates with ChatGPT

Another way to know if this malware is functional is by looking at how it communicates with OpenAI’s ChatGPT API. Developers integrate this API into their software or apps to leverage AI by communicating with OpenAI’s model. So, let’s look at how this malware does that.

The malware uses the requests.post() function, which sends HTTP POST requests in Python through the popular requests library. This is standard and correct.

The code also provides the specific URL for the OpenAI API chat completions endpoint: https://api.openai.com/v1/chat/completions. This is the correct endpoint to interact with GPT-4 (or other chat models) and generate text.

It even specifies the GPT-4 model to use (gpt-4-1106-preview). It is correctly coded to include specific data in the prompt it sends to ChatGPT to generate phishing content, and it handles ChatGPT’s response with the following code:

- response.json()['choices'][0]['message']['content']

The line of code above is designed to parse the JSON response from the OpenAI API and extract the generated phishing message.
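For readers unfamiliar with the API, here is a minimal, offline sketch of the response shape that this expression walks through. The sample payload is hand-written and abbreviated for illustration; it is not captured from the malware, and real responses carry more fields. The helper name extract_reply is hypothetical.

```python
import json

# Hand-written, abbreviated example of a Chat Completions response body.
# Real responses include more fields (id, created, usage, ...).
SAMPLE_BODY = """
{
  "object": "chat.completion",
  "model": "gpt-4-1106-preview",
  "choices": [
    {
      "index": 0,
      "message": {"role": "assistant", "content": "Hello! How can I help?"},
      "finish_reason": "stop"
    }
  ]
}
"""


def extract_reply(body: dict) -> str:
    """Return the text of the first choice, mirroring
    response.json()['choices'][0]['message']['content']."""
    return body["choices"][0]["message"]["content"]


parsed = json.loads(SAMPLE_BODY)  # stands in for response.json()
print(extract_reply(parsed))      # prints: Hello! How can I help?
```

The indexing assumes at least one choice is returned; a production client would check for an empty list or an error object before indexing.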

So far, everything looks right on the money. But here comes another key.

The malware also passes headers=self.headers with its requests. Headers are where the OpenAI API key is normally supplied, as an Authorization: Bearer token. However, the malware sample does not seem to include the API keys themselves. The keys may be obfuscated, not yet added, or intentionally left blank so the malware can be sold under a malware-as-a-service (MaaS) model.

Once again, while the malware is coded to connect correctly to OpenAI’s GPT-4 API, it may not be entirely functional, as the keys may be missing. Without a valid API key, OpenAI rejects the requests.
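For context, OpenAI’s REST API expects the key in an Authorization header of the form Bearer followed by the key, and a request without a valid key is typically refused with HTTP 401. The sketch below shows that header shape and a hypothetical analyst helper, has_bearer_token, that checks whether a headers dictionary actually carries a token. It is an illustration only: it cannot tell whether a key is valid, and "sk-example" is a placeholder, not a real key.

```python
from typing import Mapping


def build_headers(api_key: str) -> dict:
    """Headers in the shape OpenAI documents for its REST API."""
    return {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }


def has_bearer_token(headers: Mapping[str, str]) -> bool:
    """Hypothetical helper: True only if an Authorization header carries a
    non-empty Bearer token. It does not check whether the key is valid."""
    # HTTP header names are case-insensitive, so normalise them first.
    normalised = {k.lower(): v for k, v in headers.items()}
    value = normalised.get("authorization", "").strip()
    scheme, _, token = value.partition(" ")
    return scheme.lower() == "bearer" and bool(token.strip())


print(has_bearer_token(build_headers("")))            # False: blank key
print(has_bearer_token(build_headers("sk-example")))  # True: a token is present
print(has_bearer_token({}))                           # False: no header at all
```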

Evasion, detection, and analysis obfuscation techniques

To really know if this sample is functional and capable of running in the wild, it needs to be examined live by cybersecurity experts while being executed (do not try this at home if you are not qualified).

And here is where things get even more interesting.

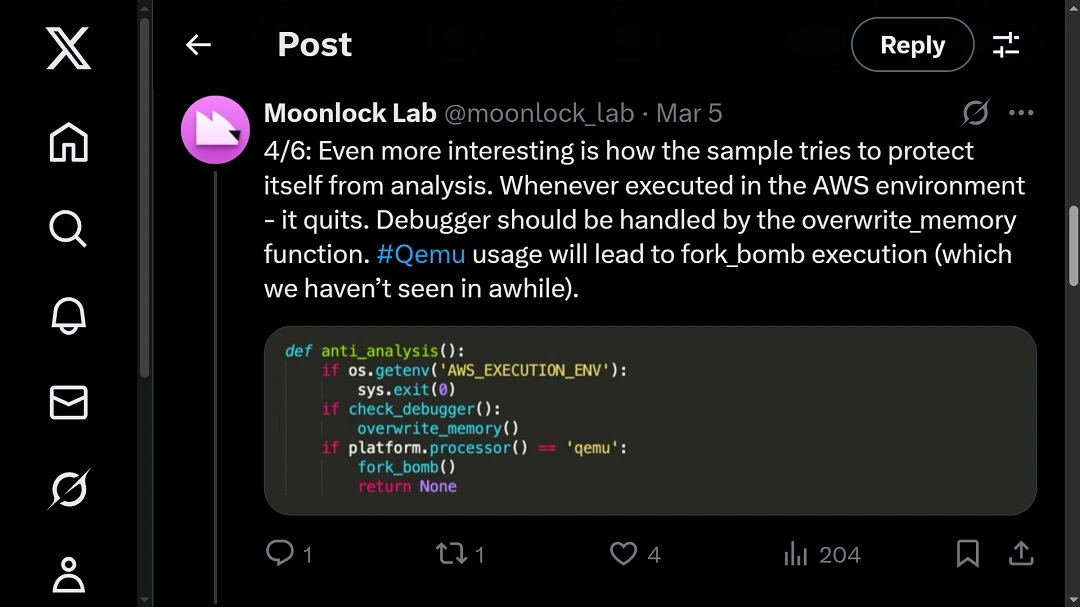

Moonlock Lab security researchers, still investigating this sample, said on X that the malware tries to protect itself from cybersecurity analysis.

“Whenever executed in the AWS environment — it quits. Debugger should be handled by the overwrite_memory function. #Qemu usage will lead to fork_bomb execution (which we haven’t seen in a while).”

A random-sleeper bot likely part of a wider botnet

Moonlock Lab experts believe, and all the initial evidence suggests, that the malware is a bot that is part of a botnet or a wider network. This bot also has other evasive tricks up its sleeve. Let’s look at one of them.

OpenAI has mechanisms to detect and prevent abuse of its API. For example, it can look at how many requests are made and at what intervals, and block any client that behaves like an automated malicious bot.

This malware appears to try to evade OpenAI’s anti-bot and anti-abuse controls by going to sleep for random intervals.

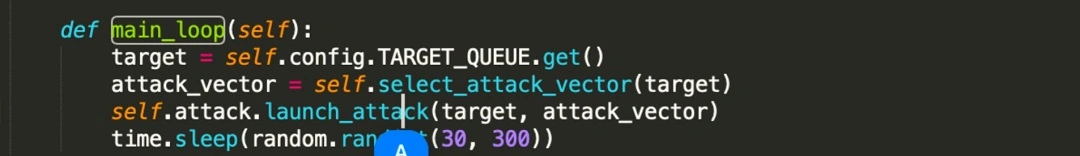

The line time.sleep(random.ran(30, 300)) in the malware, shown in the screenshot, reveals that the bot is coded to sleep between attack executions, each time choosing a random value between 30 and 300 seconds.

Cybercriminals use random sleep commands to avoid triggering alarms. Irregular timing makes it harder for security analysts to detect the malware’s behavior in real time.
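To see why random sleeps help, consider a naive detector that flags a client whose requests arrive at near-constant intervals, a common sign of automation. This is a sketch of the general idea under stated assumptions, not a description of OpenAI’s actual controls; the function names and the 0.1 threshold are illustrative choices.

```python
import random
import statistics


def gaps(timestamps):
    """Seconds between consecutive requests."""
    return [later - earlier for earlier, later in zip(timestamps, timestamps[1:])]


def looks_periodic(timestamps, max_cv=0.1):
    """Naive automation check: flag traffic whose gaps barely vary.

    Uses the coefficient of variation (standard deviation / mean of the gaps).
    The 0.1 threshold is an arbitrary, illustrative assumption.
    """
    g = gaps(timestamps)
    return statistics.pstdev(g) / statistics.mean(g) < max_cv


def simulate(n, next_gap):
    """Build n request timestamps, calling next_gap() for each delay."""
    t, stamps = 0.0, []
    for _ in range(n):
        stamps.append(t)
        t += next_gap()
    return stamps


rng = random.Random(1)  # seeded so the run is reproducible
fixed = simulate(100, lambda: 60)                       # bot sleeping a fixed 60 s
jittered = simulate(100, lambda: rng.randint(30, 300))  # random 30-300 s sleep

print(looks_periodic(fixed))     # True: flagged as machine-like
print(looks_periodic(jittered))  # False: slips past this naive check
```

A simple rate limit, by contrast, counts requests regardless of how they are spaced, so jitter alone does not defeat it.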

This may be the first time a random sleep command has been used against an AI service such as OpenAI’s API.

The full code breakdown for this evasion and deception technique is as follows:

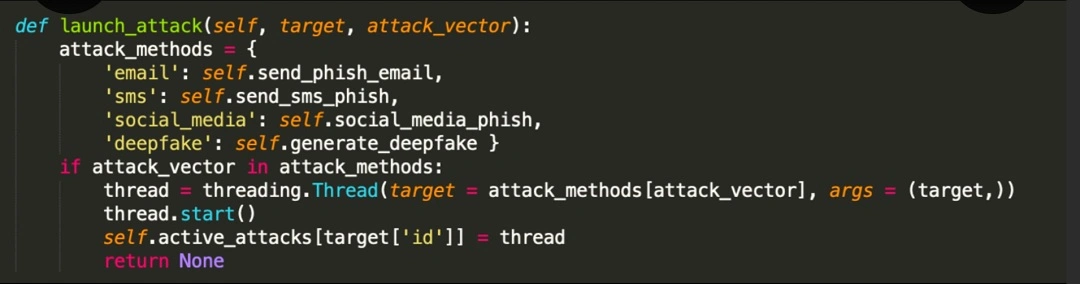

- def main_loop(self): This defines a function called main_loop, likely the core loop of the malware’s operation.

- target = self.config.TARGET_QUEUE.get(): This line retrieves a target from a queue, suggesting that the malware is designed to process multiple targets (victims).

- attack_vector = self.select_attack_vector(target): This selects an attack vector based on the target, implying that the malware might have different attack strategies.

- self.attack.launch_attack(target, attack_vector): This launches the attack against the target using the selected vector.

- time.sleep(random.ran(30, 300)): This is a crucial line. It pauses the execution of the malware for a random duration between 30 and 300 seconds (half a minute to 5 minutes).

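At this sleep rate, the average pause is about 165 seconds (midway between 30 and 300). Since a day has 86,400 seconds, each bot could run roughly 500 automated phishing attacks and messages per day, not counting the time each attack itself takes.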
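The estimate above can be reproduced with a few lines of arithmetic. This minimal sketch assumes the sleep is drawn uniformly from whole seconds between 30 and 300, as random.randint(30, 300) would do (Python’s random module has no function named ran, so randint is an assumption here). The attack_seconds parameter is a hypothetical per-attack run time, zero by default.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def attacks_per_day(min_sleep=30, max_sleep=300, attack_seconds=0.0):
    """Expected attacks per day for a loop that sleeps a uniformly random
    number of seconds between min_sleep and max_sleep after each attack.
    attack_seconds is a hypothetical time spent on each attack."""
    mean_sleep = (min_sleep + max_sleep) / 2  # mean of a uniform range: 165 s
    return SECONDS_PER_DAY / (mean_sleep + attack_seconds)


print(round(attacks_per_day()))                   # 524
print(round(attacks_per_day(attack_seconds=10)))  # 494
```

Even a modest per-attack run time pulls the figure below 500, which is why "roughly 500" is a fair round number.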

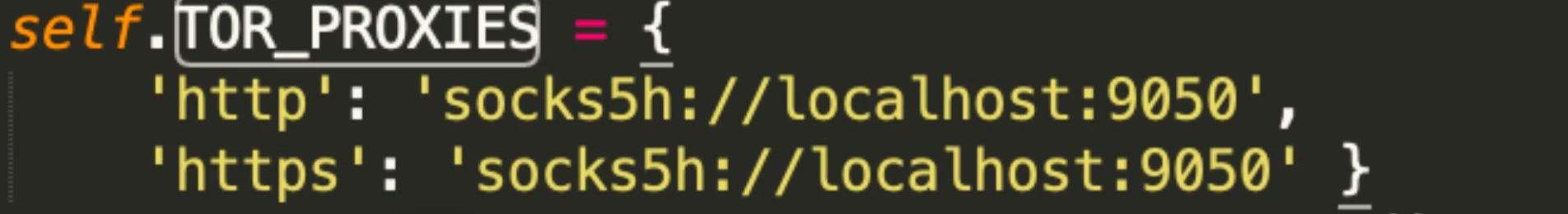

Using the decentralized Tor network to run attacks

The malware also uses the Tor network (not to be confused with the Tor Browser) to communicate with the API and run its attacks. A Mac infected with this bot would not need the Tor Browser installed to use the Tor network. However, it does need the Tor software itself.

Based on the code snippets Moonlock Lab researchers have shared so far, there is no evidence that this bot code can download the Tor software it requires to run. However, the Tor software may come bundled in other modules of this malware, or be included in the bigger toolkit we have yet to reveal.
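For analysts triaging similar samples, Tor usage often shows up as a local SOCKS proxy address in the code or configuration. The tor client listens on port 9050 by default, and Tor Browser uses 9150. The sketch below is a hypothetical string scan over extracted Python source (for example, after unpacking a PyInstaller bundle); it is a heuristic, not a detection rule from Moonlock Lab’s research, and the sample snippet is invented for illustration rather than taken from the malware.

```python
import re

# Default local SOCKS ports: 9050 (tor client) and 9150 (Tor Browser).
# In the requests library, the socks5h:// scheme makes the proxy resolve host names.
TOR_PROXY_PATTERN = re.compile(
    r"socks5h?://(?:127\.0\.0\.1|localhost):(?:9050|9150)",
    re.IGNORECASE,
)


def find_tor_proxies(source_text: str) -> list:
    """Return every Tor-style local SOCKS proxy URL found in the text."""
    return TOR_PROXY_PATTERN.findall(source_text)


# Hypothetical snippet, not the malware's code.
sample = '''
proxies = {"http": "socks5h://127.0.0.1:9050",
           "https": "socks5h://127.0.0.1:9050"}
'''
print(find_tor_proxies(sample))
# ['socks5h://127.0.0.1:9050', 'socks5h://127.0.0.1:9050']
print(find_tor_proxies("requests.post(url, json=payload)"))  # []
```

A hit only suggests Tor routing; obfuscated or dynamically built strings would slip past a plain text scan like this.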

Final thoughts

This malware sample stands out from the crowd. It is clear, concise, elegant, creative, and dangerously capable. Its traits point to an author such as a gray hat hacker or an insider, a user familiar with phishing simulations and red team techniques, or anyone who knows their way around OpenAI’s back-end mechanics.

This is an independent publication, and it has not been authorized, sponsored, or otherwise approved by Apple Inc. Mac and macOS are trademarks of Apple Inc.