Artificial intelligence has rapidly become one of the most talked-about technologies of our time. But for most users, it’s a mysterious black box whose inner workings remain unseen. We type in a prompt, and out pops an impressive answer. This creates an illusion of trust, in which it is simply assumed that basic security and privacy concerns are being addressed. However, AI, like any human-made system, isn’t flawless, and some AI products have shipped with serious flaws.

A serious bug let any app on your Mac read your ChatGPT chats

On July 1, Pedro José Pereira Vieito, Lead Data Architect at ShopFully, released a post that sparked the first ChatGPT-Apple controversy.

Vieito made a shocking discovery, concluding that OpenAI’s ChatGPT app on macOS is not sandboxed. In fact, it stored all conversations in plain text in an unprotected location.

This rather technical statement describes a very serious privacy and security threat to Mac users. Apple prides itself on running most AI processes directly on Mac computers, yet it let OpenAI make a grave error.

In simple terms, the ChatGPT app kept a local log of everything users typed in and every response the AI gave. The data was stored in an unprotected folder as raw, unencrypted plain text, where any other app or process running on the Mac could read it.

Furthermore, the ChatGPT app, unlike apps distributed through the Mac App Store, was not sandboxed. Instead of being confined to a restricted environment in which it executes code and stores its data, the app had much broader access to the user’s system.

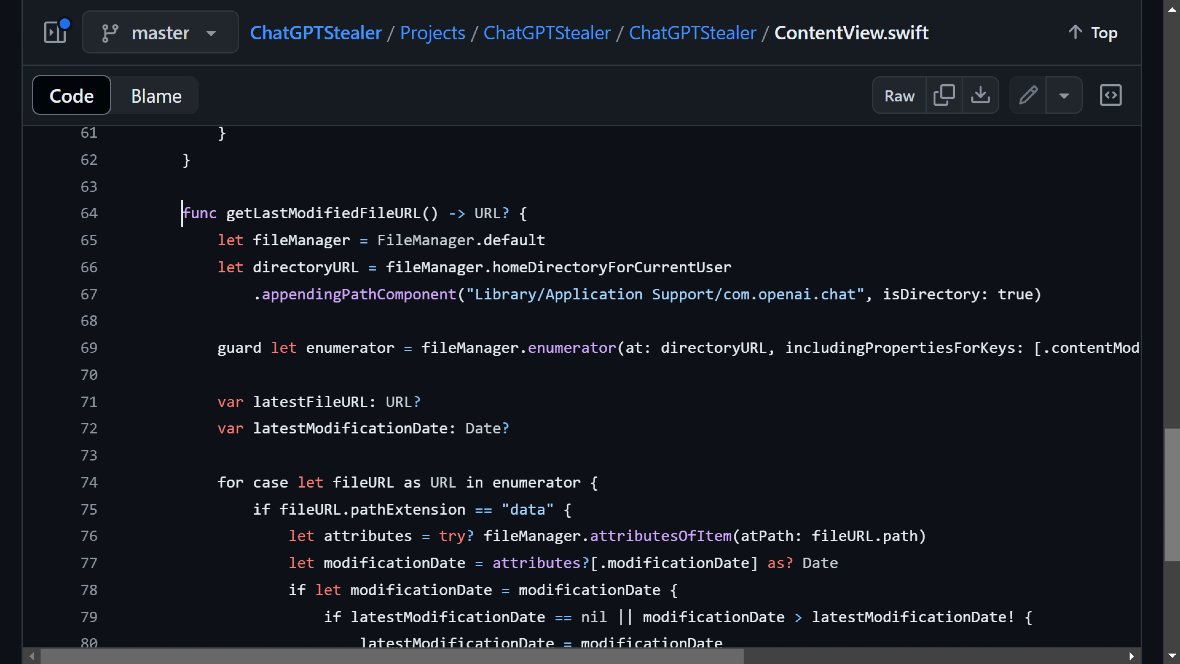

ChatGPT stored the unencrypted and non-sandboxed data in the following folder:

~/Library/Application Support/com.openai.chat/conversations-{uuid}/
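Because this folder sits in the user’s Application Support directory, reading it takes nothing more than ordinary file APIs. The Python sketch below is a minimal illustration of that point, not Vieito’s tool: it lists folders whose names start with `conversations-` under the path above. The function name `find_conversation_dirs` and the choice to pass the home directory as a parameter are assumptions made for this example.

```python
from pathlib import Path

# Path taken from the article, relative to the user's home directory.
APP_SUPPORT_SUBPATH = Path("Library/Application Support/com.openai.chat")


def find_conversation_dirs(home: Path) -> list[Path]:
    """Return conversation folders found under the given home directory.

    Hypothetical helper: it only lists directories and opens no files.
    """
    base = home / APP_SUPPORT_SUBPATH
    if not base.is_dir():
        # The app is not installed, or it has never stored anything here.
        return []
    # Match directory names of the form "conversations-<something>".
    return sorted(p for p in base.glob("conversations-*") if p.is_dir())
```

Calling `find_conversation_dirs(Path.home())` on your own Mac shows whether any such folders exist there.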

OpenAI dismissed the bug, only to fix it once the news spread in the media

When Vieito discovered the security flaw, he reported it to OpenAI via its Bugcrowd bug bounty program. However, OpenAI marked the report as “Not Applicable” and downplayed it, saying, “In order for an attacker to leverage this, they would need physical access to the victim’s device.”

In a blog post, Vieito disagreed with the conclusions that OpenAI had reached about the reported bug. In fact, it was OpenAI’s response that inspired Vieito to go public, revealing the bug on Threads.

“As I disagreed with that consideration, I decided to post this issue publicly on Threads and Mastodon to raise awareness and encourage OpenAI to fix this issue and hopefully sandbox the ChatGPT app on macOS,” Vieito said in his blog post.

To demonstrate, Vieito created a simple file grabber app capable of navigating to the folder where the raw data is stored and extracting it in real time.

Vieito’s Threads post caught the attention of top technology media such as The Verge, Ars Technica, 9to5Mac, and others. From here, the news snowballed, putting pressure on OpenAI.

OpenAI patches the bug

Vieito explained how things developed from there. “Following these publications, OpenAI finally acknowledged the issue and released ChatGPT 1.2024.171 for Mac, which now encrypts the conversations. The conversations are now stored in a new location.”

The fact that OpenAI had opted out of macOS sandboxing, and that ChatGPT was storing conversations unencrypted in an unprotected folder readable by other apps, is as shocking as the way OpenAI responded to the bug report.

Considering that the vulnerability represented a serious privacy and security issue for Mac users, downplaying the report was, to say the least, highly unethical. One thing is certain: OpenAI’s actions did not align with Apple’s security and privacy frameworks.

ChatGPT now encrypts chat data and stores it in a new folder. However, the app is still not sandboxed. The data is now found at:

~/Library/Application Support/com.openai.chat/conversations-v2-{uuid}/

The files in this folder are now encrypted with a key named “com.openai.chat.conversations_v2_cache.” The key is stored in the macOS Keychain, and the old plain-text conversations are removed after upgrading to the new version.
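A rough way to confirm on your own machine that conversation files are no longer stored as readable text is to test whether their bytes decode as ordinary text. The Python sketch below is a heuristic only. The function name `looks_like_plaintext` and the 95% threshold are assumptions chosen for illustration, and the actual file format is not documented here. Encrypted content generally fails to decode as UTF-8, while plain-text logs pass.

```python
def looks_like_plaintext(data: bytes, min_printable: float = 0.95) -> bool:
    """Heuristically decide whether raw bytes look like readable text.

    Hypothetical helper: returns True when the bytes are valid UTF-8 and
    at least `min_printable` of the characters are printable or whitespace.
    """
    if not data:
        return False
    try:
        text = data.decode("utf-8")
    except UnicodeDecodeError:
        # Random-looking (for example, encrypted) bytes usually end up here.
        return False
    printable = sum(ch.isprintable() or ch in "\n\r\t" for ch in text)
    return printable / len(text) >= min_printable
```

Reading a file with `Path(...).read_bytes()` and passing the result to this function gives a quick, non-authoritative signal. A result of False does not prove that strong encryption is in use.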

Despite the improvement, Mac users should be aware that ChatGPT is still not sandboxed. This means that the conversation files, although now encrypted, still sit in a location that the sandbox does not protect.

Why is sandboxing important for Mac users?

Sandboxing is a fundamental security feature in macOS that safeguards user data and system integrity. It acts like a secure container, isolating each app and restricting its access to other parts of the system. This approach offers several key advantages for Mac users.

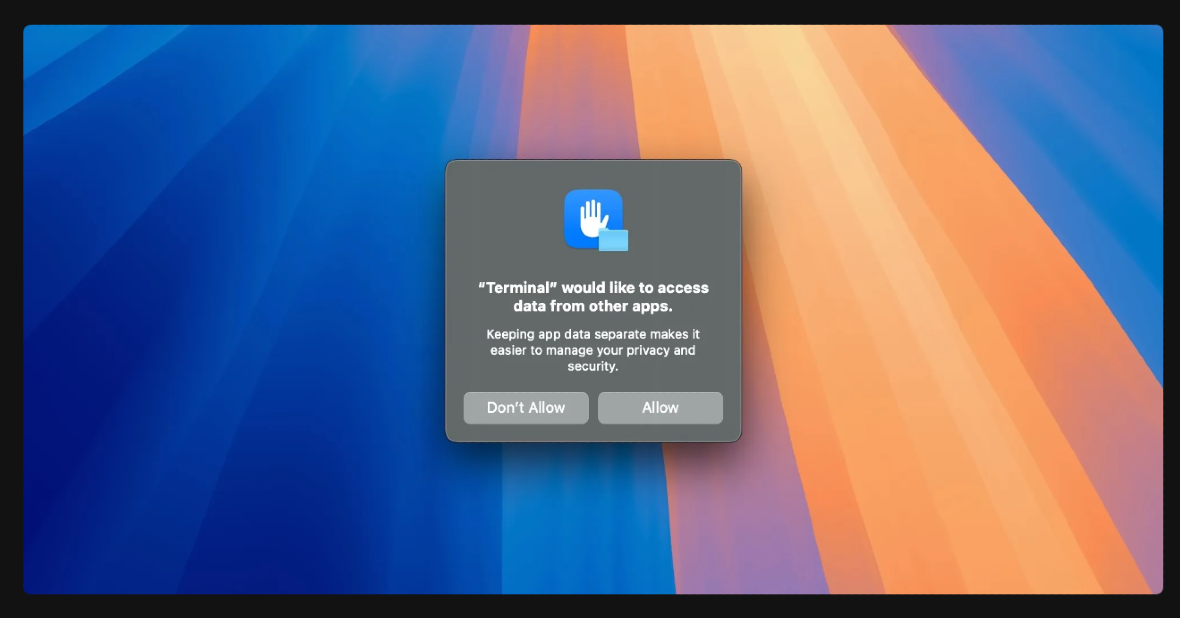

Without sandboxing, even a non-malicious app could potentially access and tamper with sensitive data like user files, system settings, or even other apps. Sandboxing prevents this by limiting an app’s reach to its designated area, significantly reducing the potential damage caused by accidental bugs or vulnerabilities within the app itself.

Sandboxing is also a strong defense against malware: even if malware compromises an app, the sandbox restricts its ability to spread or access critical system resources and user data. This creates a crucial layer of defense against cyberattacks. Additionally, it improves users’ control over their data.
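Whether a Mac app opts into the sandbox is recorded in its code-signing entitlements: sandboxed apps carry the `com.apple.security.app-sandbox` entitlement set to true. The Python sketch below shows one way to check for it, assuming the entitlements have already been exported as a property list (for example, from Apple’s `codesign` tool). The function name `is_sandboxed` is hypothetical, and the sketch does not run `codesign` itself.

```python
import plistlib

# Entitlement key that marks an app as opting into the macOS App Sandbox.
SANDBOX_KEY = "com.apple.security.app-sandbox"


def is_sandboxed(entitlements_plist: bytes) -> bool:
    """Return True if the entitlements plist enables the App Sandbox.

    Hypothetical helper: expects the raw bytes of an entitlements
    property list (XML or binary), obtained separately.
    """
    entitlements = plistlib.loads(entitlements_plist)
    if not isinstance(entitlements, dict):
        return False
    # The entitlement must be present and explicitly set to true.
    return entitlements.get(SANDBOX_KEY) is True
```

An app whose entitlements lack this key, or set it to false, runs outside the sandbox.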

On iPhone and iPad, all apps obtained from the App Store are sandboxed to provide the tightest controls. On Macs, many apps are obtained from the App Store, but Mac users can also download and use apps from the internet. This means that not all apps are sandboxed, especially older ones that lack this security measure.

However, a communication tool like ChatGPT should prioritize user privacy, given how sensitive the conversations it handles can be.

“Unfortunately, OpenAI opted out of sandboxing the ChatGPT app on macOS and stored conversations in plain text in a non-protected location, disabling all these built-in defenses,” Vieito said. “This meant that any running app, process, or malware could read all your ChatGPT conversations without any permission prompt.”

Conclusion

The recent discovery regarding the ChatGPT app for macOS has exposed a critical vulnerability, raising concerns about users’ data security in the burgeoning field of AI.

Unlike apps distributed through the Mac App Store, ChatGPT opted out of Apple’s sandboxing measures. This security lapse highlights the potential risks associated with AI development and the importance of prioritizing user privacy.

While OpenAI has since addressed the encryption issue, the app remains non-sandboxed. This serves as a wake-up call for Apple. The company that’s known for its high security and privacy standards is navigating into uncharted waters with AI, and user trust hangs in the balance.

The incident serves as a reminder that security shouldn’t be an afterthought but a core principle guiding the development and deployment of AI technologies.

This is an independent publication, and it has not been authorized, sponsored, or otherwise approved by Apple Inc. Mac and macOS are trademarks of Apple Inc.