From deepfakes interfering in the US election, to a new iOS trojan that steals biometric data from iPhone users, to the Hong Kong deepfake scandal that cost a multinational $26 million, AI-driven deepfake attacks are on the rise.

Moonlock reached out to experts in biometrics and cybersecurity to get an inside view of how dangerous deepfake attacks are, how they are evolving, and what users can do to stay safe.

Why are deepfake attacks thriving?

As the world turns to biometrics, facial identification, and fingerprint identification to secure devices, systems, and accounts, cybercriminals are adapting. Bad actors are inevitably finding innovative ways to bypass the new safeguards imposed on them.

Biometric attacks were extremely difficult to carry out in the past. But now, thanks to the rise of generative AI, cybercriminals hold the upper hand. Some of these tools have the potential to compromise otherwise secure technology.

The meteoric rise of deepfakes

Onfido’s Identity Fraud Report 2024 reveals that deepfake fraud attempts spiked by 3,000% in just one year.

Jake Williams, former US National Security Agency (NSA) hacker and faculty member at IANS Research, spoke to Moonlock about the evolution of deepfake attacks.

“It is infinitely easier for the average person to create a deepfake today than it was even just a year ago,” Williams said. “A few years ago, anything that was even a remotely convincing deepfake took a lot of processing power and specialized video knowledge to make.”

“Today, it’s significantly easier, with multiple websites offering it as a service,” Williams added. “Of course, these still aren’t as convincing as processing with cutting-edge hardware. But most are passable if you aren’t paying close attention.”

Facing the reality of crime in a digital world

Moonlock Lab researchers highlighted a report by Europol updated in January 2024. According to the report, “The most worrying technological trend is the evolution and detection of deepfakes.”

The report concludes that law enforcement is challenged by the impact of deepfake technology. This includes the impact it has on crime, disinformation, document fraud, the work of law enforcement and policy, and legal processes.

“Deepfake-related attacks are becoming more common due to advancements in AI technology,” Moonlock experts said. “Many people are vulnerable to being deceived by deepfake content. As deepfake technology advances, it is likely that attackers will use it to create more realistic and convincing fakes that can target individuals, organizations, and even nations.”

The deepfake trend of image-based sexual abuse

Moonlock Lab stated that the rise in deepfakes is alarming, and we should expect the trend to continue.

Some potential scenarios include political disinformation, impersonating high-profile figures, or blackmailing victims with fabricated evidence. But image-based sexual abuse deepfake attacks represent one of the most alarming trends.

Recently, AI-generated, deepfaked pornographic images of singer Taylor Swift went viral.

Star Kashman, a cybersecurity and privacy law expert — whose work was recognized by the Office of the Director of National Intelligence (the governing body that oversees the CIA and NSA) — fights for victims of cybercrime such as cyberstalking, doxing, deepfakes, child sexual exploitation online, and cyber-harassment.

Speaking from her own experience, she warned that image-based sexual abuse is one of the many ways that deepfake attacks are becoming more and more common. It is vital to protect against this growing threat.

“The rapid evolution of AI-driven deepfakes poses a significant threat to both individual privacy and cybersecurity,” Kashman said. “Deepfake-related attacks are becoming more common as technology advances and AI becomes more common, and these attacks could expand in scope and sophistication in the near future.”

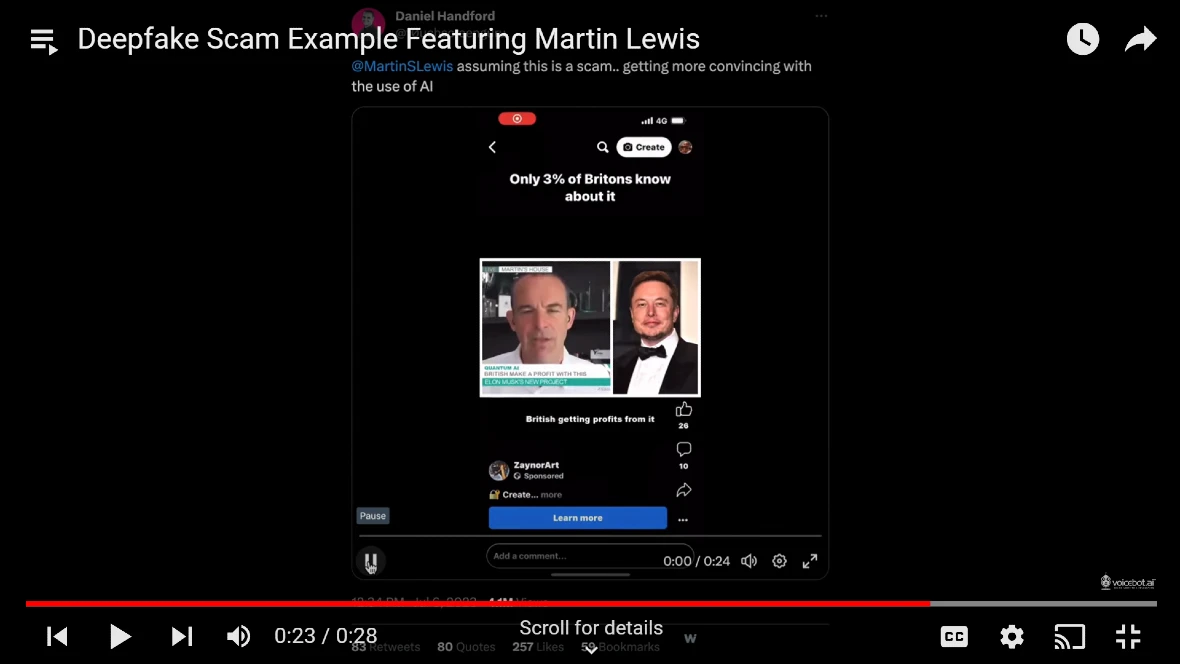

The inevitability of deepfake-as-a-service

Bitdefender has been sounding the alarm on deepfakes heavily since 2024. In addition to numerous reports, Bitdefender spoke to Moonlock about the issue.

Alina Bizga is a Security Analyst at Bitdefender. Bizga told Moonlock that threat actors are actively using deepfake technology to target organizations and consumers alike.

“While some of these attacks are sloppy fraud attempts, we expect cybercriminals to evolve their MO and fully capitalize on AI and machine learning to enhance their spear-phishing and business email compromise (BEC) attacks, phone scams, and identity crimes,” Bizga said.

Bizga warned that cybercriminals and organizations that are focused on the malware-as-a-service business could expand their underground business to provide deepfake-as-a-service options for less skilled cybercriminals. This would lead to a radical uptick in deepfake attacks as the tech becomes more widely available for bad actors.

The many ways criminals use deepfake technology

Deepfake technology, in the wrong hands, can be used for a lot more than just biometric attacks. The specialists interviewed in this report reveal just how creative criminals are getting with this disruptive innovation.

Moonlock Lab listed the many ways that deepfakes are being used criminally:

- Creating fake news and propaganda to influence public opinion, spread hateful content, cause panic, or incite violence

- Spoofing biometrics to bypass authentication systems

- Sabotaging competitors, rivals, or enemies by damaging their reputation, credibility, or relationships

- Manipulating market trends, stock prices, or business decisions by spreading false or misleading information

- Launching social engineering attacks where individuals play a key role (for instance, attackers may use deepfakes to impersonate individuals and manipulate victims into transferring money or revealing sensitive information)

- Creating fake medical records or diagnoses or synthesizing realistic images of medical scans, such as CT, MRI, or X-ray, to show the presence or absence of tumors, lesions, or other abnormalities (this can be used for insurance fraud or manipulating clinical trials or research outcomes)

The sky’s the limit: Cyberwarfare, virtual kidnapping, scams, and theft

When questioned about what criminals use deepfake technologies for, Bizga from Bitdefender said that “the sky’s the limit.”

Bizga added that cybercriminals use advanced artificial intelligence and machine learning technologies in all their social engineering schemes. They also use it to blackmail individuals by generating fake materials, falsely incriminate victims, infiltrate businesses and organizations, conduct virtual kidnapping, and pull off scams that maximize identity theft crimes.

Shawn Waldman, founder and CEO of Secure Cyber Defense, added cyberwarfare to the list of deepfake uses.

“We’ve seen deepfake technology used in war to spread disinformation campaigns, aiming to sway public opinion and/or to get soldiers to surrender their position,” Waldman said.

Waldman also highlighted how deepfake criminals go after the most vulnerable victims. “We’re also seeing and hearing about deepfake attacks that are specifically targeting the elderly,” Waldman explained. “We’ve seen many cases that involve deepfake audio recordings pretending to be a grandson or relative who is in trouble and asking for money. Because these recordings sound just like their relatives, they have been scammed out of tons of money.”

Kashman agreed. “Deepfake creation could be used to mimic voices to conduct scam phone calls to steal money, or could be used to abuse young women by plastering their face across pornographic content they did not participate in.”

Fighting back against deepfake attacks with technology

The emergence and rapid spread of generative AI has left many sectors playing catch-up. Criminals are fast to learn, and the cybersecurity industry is scrambling to keep pace. Experts are working to roll out the software, apps, and tools necessary for the public and organizations to protect themselves.

Security solutions combating deepfake scams

Moonlock Lab researchers state that biometric manufacturers and technology companies are all hard at work building various security solutions to detect deepfake calls, videos, and meetings.

“These solutions incorporate advanced algorithms that analyze facial and voice patterns to identify inconsistencies indicative of manipulation,” Moonlock experts said. “One such mechanism is the Phoneme-Viseme Mismatch technique, developed by researchers from Stanford University and the University of California. This technique compares the movement of the mouth (visemes) with the spoken words (phonemes) and detects mismatches that indicate a deepfake video.”
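To make the idea concrete, here is a toy sketch of a phoneme-viseme check. It is not the researchers' implementation. It assumes a hypothetical upstream step has already aligned each video frame with a phoneme label and a normalized mouth-openness value, and it only looks at sounds like M, B, and P, which require the lips to close. The `Frame` type, the `mismatch_ratio` function, and the `0.2` threshold are all illustrative assumptions.

```python
from dataclasses import dataclass

# Phonemes that can only be pronounced with the lips fully closed (bilabials).
CLOSED_MOUTH_PHONEMES = {"M", "B", "P"}


@dataclass
class Frame:
    phoneme: str           # phoneme aligned to this frame (hypothetical input)
    mouth_openness: float  # 0.0 = lips closed, 1.0 = wide open (hypothetical input)


def mismatch_ratio(frames, closed_threshold=0.2):
    """Return the share of closed-mouth phoneme frames where the lips look open.

    closed_threshold is an assumed cutoff, not a published value.
    """
    relevant = [f for f in frames if f.phoneme in CLOSED_MOUTH_PHONEMES]
    if not relevant:
        return 0.0  # nothing to compare, so no evidence either way
    mismatches = sum(1 for f in relevant if f.mouth_openness > closed_threshold)
    return mismatches / len(relevant)
```

A high ratio would only be a hint that the audio and video do not belong together. A real detector would need reliable speech alignment and lip tracking, neither of which is shown here.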

Kashman agreed that advanced detection algorithms and programs and cross-referencing methods can be used to analyze the authenticity of the content. Kashman also recognized that there are some applications coming out to detect AI-created work. However, new apps are not necessarily reliable enough to be fully trusted yet.

“These technologies are so new that it is hard to determine how reliable they are,” Kashman added. “These security solutions intend to identify inconsistencies and anomalies that may suggest content has been manipulated. For instance, detection tools could analyze the rate at which someone blinks in a video, or subtle texture-related details displayed in a digital image that are typically thrown off in the creation of deepfakes.”
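The blink-rate idea Kashman mentions can be sketched in a few lines. The example below is a simplified illustration, not a production detector. It assumes a hypothetical face-tracking step has already produced one eye-openness value per frame, and the closed-eye threshold and the "plausible" blinks-per-minute range are placeholder assumptions rather than clinical figures.

```python
def count_blinks(eye_openness, closed_threshold=0.2):
    """Count blinks as transitions from open eyes to closed eyes.

    eye_openness is a per-frame series (1.0 = fully open, 0.0 = closed),
    assumed to come from a hypothetical face-tracking step.
    """
    blinks = 0
    was_closed = False
    for value in eye_openness:
        is_closed = value < closed_threshold
        if is_closed and not was_closed:
            blinks += 1  # a new closure started on this frame
        was_closed = is_closed
    return blinks


def blink_rate_is_suspicious(eye_openness, fps, min_per_minute=5.0, max_per_minute=40.0):
    """Flag clips whose blink rate falls outside an assumed plausible range."""
    minutes = len(eye_openness) / fps / 60.0
    if minutes == 0:
        return False  # an empty clip gives no evidence
    rate = count_blinks(eye_openness) / minutes
    return rate < min_per_minute or rate > max_per_minute
```

As Kashman notes, signals like this are hard to rely on. Newer deepfakes reproduce blinking far better, so a check of this kind is at most one clue among many.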

Tools in the fight against deepfakes

Bizga explained that threat actors have the upper hand when it comes to exploiting technological innovations and AI. This tends to keep organizations on the defensive. However, Bizga highlighted how traditional security measures, combined with AI-based threat detection, can be used to prevent deepfake attacks.

“Organization leaders who have recognized the severe business risks that deepfakes pose are scaling their security measures to adopt zero-trust IT security models, employ AI-based threat and anomaly detection technologies, and implement digital verification tools.”

Waldman also agreed that new deepfake security technology is still being developed. But Waldman highlighted the work of a new startup called Clarity, which is developing ways to detect deepfake videos. Another standout is Perception Point, a company that developed a new AI email detection technology.

According to Waldman, the world is still in the infancy stage when it comes to the detection of deepfakes and AI-generated content.

Injection attacks challenging the industry

Traditional attacks on biometric systems consisted of simple tactics such as “masking.” But today, biometric security is being compromised by injection attacks, where attackers feed AI-generated deepfake data directly into the system that requests verification and decides whether to approve it.

In February 2024, Gartner predicted that by 2026, 30% of enterprises will consider identity verification and authentication solutions unreliable due to AI-generated deepfakes.

Gartner research adds that injection attacks increased by 200% in 2023. “Preventing such attacks will require a combination of presentation attack detection (PAD), injection attack detection (IAD), and image inspection,” Gartner writes in its report.

Bizga from Bitdefender explained that mitigating injection attacks can be very difficult. The process requires the use of dedicated AI and algorithms that can detect digital artifacts invisible to the human eye.

Big tech, open-source tools, and live AI detection on the firewall

In contrast, Waldman from Secure Cyber Defense believes that popular platforms and companies like Zoom, Microsoft Teams, and Google Meet are scrambling to license or develop the technology necessary to detect and shut down deepfake injection attacks.

“But I’ve not seen anything developed as of yet,” Waldman added. “There are a lot of open-source and startup solutions, but nothing so mainstream that it’s mature enough to license and put into place as of yet.”

Waldman added that companies like Microsoft and Intel, just to name a few, already have cutting-edge technology that can detect if a photo or video is real. But these tools only work by uploading the suspicious content.

Waldman warned that real-time event deepfake security technologies are different from those that require manual uploads.

The call for a new multilayer approach to deepfake security

Moonlock Lab said solutions like IDLive Face Plus can be very helpful against injection attacks. The tool detects injection attacks that use virtual or external cameras. It also prevents browser JavaScript code modifications and protects against emulators and cloning apps.

Experts have also called for ethical and legal guidelines and regulations for the responsible use and creation of AI-generated content.

“Addressing the new wave of deepfakes requires a multifaceted solution,” Moonlock researchers said. “It might involve a complex sequence of security features, like a combination of several security features that sequentially verify the video source (its origin), assess its quality (the likelihood of it being generated by AI, possibly using a trained model on typical human movements to recognize atypical ones), and consider other supplementary factors.”
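The sequential approach the researchers describe can be pictured as a short chain of checks, each of which must pass before the next one runs. The sketch below is purely illustrative. The `VideoEvidence` fields, the check names, and the `0.5` score cutoff are hypothetical stand-ins for whatever a real system would measure.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class VideoEvidence:
    source_verified: bool     # did the origin of the video check out? (assumed input)
    synthetic_score: float    # 0.0-1.0 output of a hypothetical AI-generation detector
    context_consistent: bool  # do supplementary factors agree? (assumed input)


Check = Tuple[str, Callable[[VideoEvidence], bool]]


def build_checks(max_synthetic_score: float = 0.5) -> List[Check]:
    """Return the checks in the order they should run; the cutoff is an assumption."""
    return [
        ("source", lambda e: e.source_verified),
        ("quality", lambda e: e.synthetic_score <= max_synthetic_score),
        ("context", lambda e: e.context_consistent),
    ]


def first_failed_check(evidence: VideoEvidence, checks: List[Check]) -> Optional[str]:
    """Run checks in sequence and return the name of the first failure, or None."""
    for name, passes in checks:
        if not passes(evidence):
            return name
    return None
```

Running the checks in order means a video from an unverified source is rejected before any costlier analysis is attempted, which is one plausible reason to layer checks sequentially.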

Bitdefender agreed that a multilayered security approach was necessary. “Current security solutions that only rely on antiviruses are not enough to protect against scams and cyberattacks that leverage deepfake technology,” Bizga said. “Combating this AI-driven wave of attacks requires a multilayered approach that involves awareness and education of the public, developing a new generation of tools that employ deep learning techniques and AI to tackle the threats, and setting strict deepfakes and synthetic media regulations and reporting systems.”

How can users protect themselves against deepfakes?

As Williams explained, there are some solid deepfake upload detection technologies. Examples include those developed by Microsoft and Intel. But for end users who do not have access to these types of tools, things can be very challenging. For now, much of the work of staying safe against deepfakes falls to the user.

“First, ask yourself, does this make sense, and is it being shared by a reputable source?” Williams advised users. “Looking for aliasing (blurry artifacts) where images are blended is a great method for detecting many face-swap style deepfakes. But as deepfakes get better and better, detection will get harder, so this method will eventually cease to work.”

Williams added that the best deepfake detector for humans is time. Given enough attention, any suspected fake will be run through a commercial tool and/or analyzed by experts to determine its authenticity.

Advocating for stronger privacy

Kashman added that users trying to spot deepfake videos should look for inconsistencies in lighting and movement. Glitchy imaging, shadows, or unnatural facial and body movements are further clues.

“To protect biometric data, users should be cautious about where and how they share personal information,” Kashman said. “It is unfortunate, however, because it appears that we are shifting into a reality in which we can no longer opt out of the collection of biometric data.”

“I think the best chance of protecting our biometric data comes with advocating for better privacy protections within the United States. We are far behind, and our data is being exploited beyond measure. We must create public discussion about these issues and urge the government to advocate for a better right to privacy in the digital age.”

Fighting hyper-realistic deepfakes

Bizga said that the tech has gotten to the point that it can be used to convincingly manipulate content. The results can be extremely realistic. These new kinds of deepfakes show fewer telltale errors, such as blurring, unnatural blinking, or glitches.

“This is why users need to carefully research the video’s narrative and exercise extreme caution when dealing with too-good-to-be-true promises or requests for money and personal information,” Bizga said.

Bitdefender developed Scamio (a free tool) to help less savvy individuals spot deepfake scams. The technology is a next-gen AI scam detector. It helps users immediately tell if online content or messages they are interacting with are attempts to scam them.

The SIFT model

Waldman spoke about the SIFT model, a strategy that can help users differentiate deepfake videos from authentic footage.

SIFT stands for:

- Stop

- Investigate the source

- Find better coverage

- Trace the original context

“This is really just a fancy way of saying slow down!” Waldman said. “If it seems too good to be true, then listen to your gut. I recently used this technique when I was watching a clip on X of a Department of Defense press conference. It sure looked like someone had dubbed audio that wasn’t real. I was able to find the official video of the press conference on YouTube to validate what I was seeing and hearing.”

Final thoughts and security tips for users

The most secure methods we currently have to validate and authenticate people around the world are at a dangerous crossroads. AI has stepped into this game, giving bad actors a massive advantage.

Imagine a world where your fingerprints, ID, documents, videos, or images can be digitally altered to commit crimes. It is a world where you receive a realistic fake phone call from your boss, and where criminals set up deepfake video meetings, post explicit fake content, run virtual kidnapping schemes, and prey on people for political or financial reasons. That is a world where something has gone incredibly wrong.

Moonlock expects new AI security solutions to emerge as a response to the rise in deepfake attacks, driven by leaders of the tech industry. These tools will become more accessible to users.

Such tools will eventually be implemented in firewalls, creating live real-time protection against deepfake content and attacks. However, these tools are not yet available to the general public. Therefore, we end this report with actionable steps you can take today to protect yourself.

Actionable steps users can take today

Moonlock Lab experts left us with 10 actionable tips that you can use to protect your data against deepfake attacks and better spot them in the wild:

- Rely on multi-factor authentication (MFA).

- Regularly refresh biometric templates to prevent misuse.

- Restrict who can see or access your photos, videos, and other personal data online or offline.

- Report or delete any fake content that uses your biometric data.

- Raise awareness about deepfakes and be vigilant.

- Check the source and authenticity of the video and its author.

- Pay attention to unnatural movements, odd facial expressions, or lip-sync issues.

- Consider the context in which a piece of content was shared. Hackers may try to manipulate you into sharing information that you shouldn’t share.

- Compare the video with other sources or versions of the same event.

- Use online tools or applications that can help detect or analyze deepfake videos.

Whether we like it or not, deepfakes are here to stay. And, like any new technology, they can and will be used by malicious actors. The question is whether cybersecurity professionals can keep up and combat deepfakes as effectively as cybercriminals utilize them.