As high-profile ransomware and state-sponsored cyberattacks take center stage in the global threat landscape, another type of threat is flying under the radar and on the rise: insider threats.

Using sophisticated social engineering techniques, malicious hackers are applying for IT jobs at high-value companies. And, thanks to new technologies, they’re actually getting hired. These bad actors pass background checks with stolen identities and use deepfakes and other AI tools to land positions. Once they are hired, the damage begins.

To get the latest information on insider threats and how criminals use new tech, Moonlock sat down with experts to talk about the dangers of remote hiring and what needs to change.

Malicious insider threats: A trend on the rise

As Moonlock recently reported, KnowBe4, a security awareness training and simulated phishing company, made headlines for stopping a hacker who had used AI and deepfakes to get hired by the company.

KnowBe4 stopped the attacker as soon as he began loading malware onto the Mac workstation the company had provided. However, not all businesses have KnowBe4’s cybersecurity resources and expertise. More concerningly, this attack is not an isolated incident.

Just weeks after the KnowBe4 incident, a man from Nashville was arrested for helping North Korean remote workers get IT jobs. Matthew Isaac Knoot, 38, allegedly assisted North Korean IT workers in getting hired by US and British companies under false identities.

Putting a number on global insider threat attacks

Undoubtedly, malicious insider threats are a growing trend. However, it is extremely challenging to estimate how many of these attacks happen every year around the world.

Malicious insider threats are not tracked the way ransomware and DDoS attacks are, and many go undetected or unreported. Still, based on available information, experts believe 3 out of 10 cyberattacks are linked to insider threats.

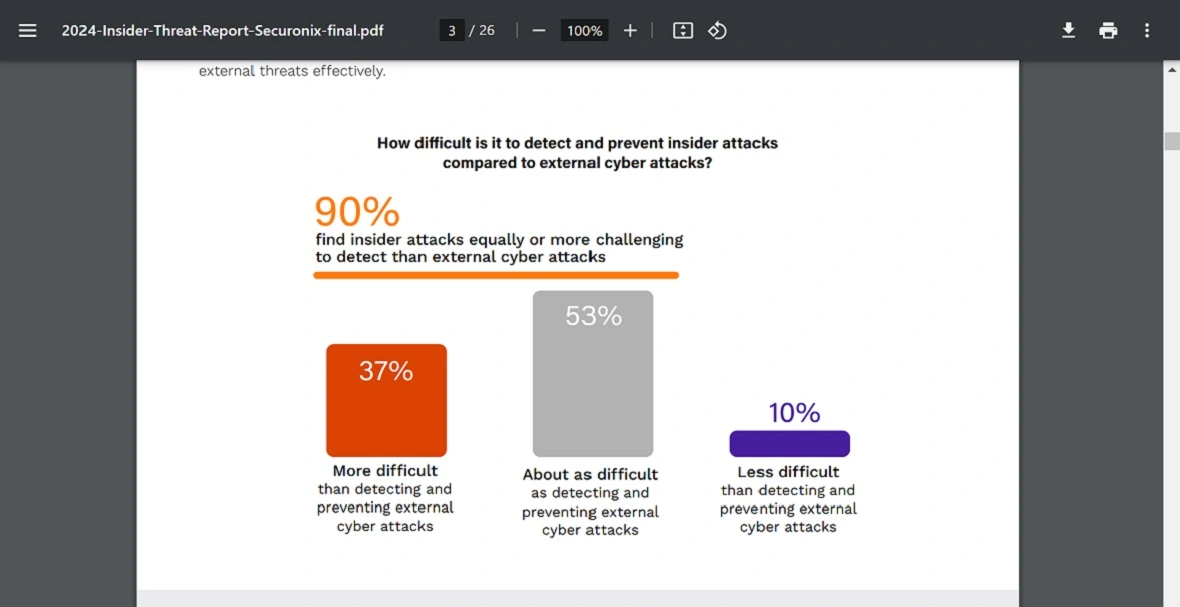

In its 2024 report, Securonix found a marked increase in concern about malicious insiders, rising from 60% of organizations in 2019 to 74% in 2024. The report also revealed that 90% of companies find insider attacks equally or more challenging to detect than external attacks, and a majority (75%) worry about the impact that emerging technologies like AI have on this trend.

The US Department of State, along with the Department of the Treasury and the Department of Justice, has been warning since 2022 that thousands of North Korea-linked workers are applying for IT jobs, from low-level positions to advanced engineering roles.

The rise of state-sponsored remote workers linked to North Korea

These workers do the jobs they were hired for and send their earnings back to the North Korean government, which uses the funds for sanctioned military and defense programs, including the development of weapons of mass destruction and ballistic missiles. The Department of Justice estimates that this scheme brings in $300 million every year.

However, North Korea-linked IT workers don’t always stop there. When hired by a high-value company, they sometimes run cyberespionage campaigns, loading malware or spyware onto the business’s systems.

Aaron Painter, CEO of Nametag, an identity verification platform, spoke to Moonlock about the issue.

“The FBI has been warning us for years about North Korean spies posing as IT workers,” Painter said. “Now that nation-state threat actors can use freely available generative AI tools, including deepfakes, to very successfully fool their way through interviews and employment checks, enterprise leaders need to think about implementing even stricter identity verification measures during the interview process.”

Painter warned that HR teams alone cannot be responsible for verifying the identity of new hires. IT teams must know who is gaining access to a network.

“We’re increasingly seeing identity verification occur when new account credentials are issued to keep accounts and corporate infrastructure protected,” Painter said. “The same should apply to hiring. Since we’re living in an era of AI deepfakes, vetting strategies for remote workers need to be completely revamped.”

The damage insider threats can cause

North Korean hackers are just the tip of the iceberg. In 2023, the Ponemon Institute reported that 71% of companies experienced “between 21 and more than 40” insider threat incidents per year, up from 67% in 2022. While some of these incidents stem from human error, 1 in 4 is a malicious insider attack.

This trend challenges organizations in new ways, as they face increasing costs to respond to these types of security incidents.

According to the Ponemon Institute, insider incidents take an average of 86 days to contain, and the average annual cost of these incidents per organization has risen from $8.3 million in 2018 to $16.2 million in 2023.

Outsider threats vs. insider threats

While external attacks can often be countered with standard, well-understood security measures, insider threats are harder to predict. An insider has regular access to an organization’s sensitive information and may know exactly how that information is protected.

CISA outlines the behaviors through which an insider threat can cause harm, whether the insider is a bad actor hired under a false identity or an existing employee. These include:

- Espionage

- Terrorism

- Unauthorized disclosure of information

- Corruption, including participation in transnational organized crime

- Sabotage

- Workplace violence

- Intentional or unintentional loss or degradation of departmental resources or capabilities

IBM recognizes that, despite not grabbing as many headlines as external attacks, insider threats can be even more costly and dangerous.

The IBM Cost of a Data Breach Report 2023 found that breaches initiated by malicious insiders were the most costly of all, at approximately $4.90 million on average per breach. That’s 9.5% higher than the $4.45 million global average cost of a data breach.

Additionally, Verizon found that the amount of compromised data is also higher when insiders gain access to a system. External threats, on average, breach 200 million records. But insiders have exposed 1 billion records or more.

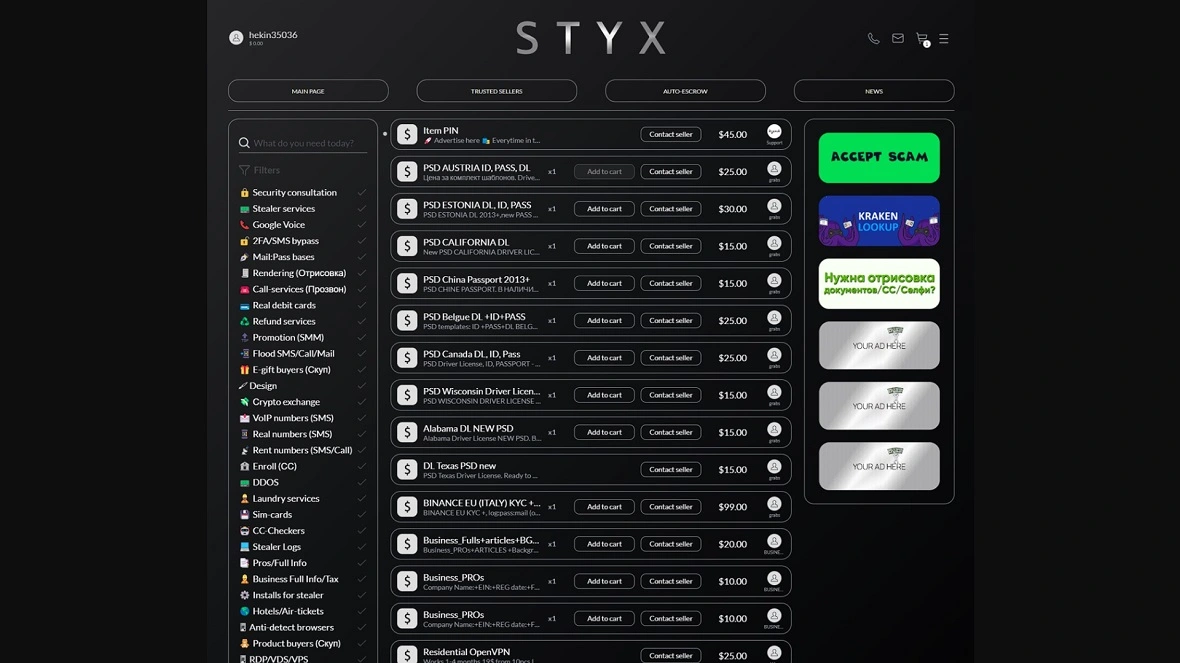

The new criminal toolbox to get hired: GenAI, deepfakes, and identity theft

Hackers can leverage a potent combination of generative AI, identity theft, and deepfakes to infiltrate organizations. By stealing personal information, they can create convincing synthetic identities. These fake personas are then enhanced with deepfakes, crafting highly realistic digital representations.

With a stolen identity and a convincing digital façade, hackers can apply for jobs and often pass background checks with ease. Once hired, they can access sensitive data, manipulate systems, and commit corporate espionage. The sophistication of these attacks shows why securing remote hiring and remote work has become essential to maintaining cybersecurity in an increasingly digital world.

Edward Tian, CEO of GPTZero, the popular AI detector platform, spoke to Moonlock about how generative AI is changing the playing field.

“It has become incredibly common for people to use AI when applying for jobs — whether they are legitimate candidates or not,” Tian said.

“Many companies receive resumes, cover letters, and application questions that have been generated with AI,” Tian added. “It can be practically impossible to determine which candidates are real and which are scams.”

Remote hiring security solutions and tips for organizations

Matthew Franzyshen is Business Development Manager at Ascendant Technologies, an IT company that has offered outsourced IT solutions to businesses for over 30 years. Franzyshen spoke to Moonlock about how bad actors leverage new technologies.

“Some candidates are still able to pass the advanced screening processes in place despite using fabricated documents or overly exaggerated resumes,” Franzyshen said. “To reduce these dangers, businesses must be alert and update their verification methods frequently.”

As Franzyshen explained, malicious insider threats put businesses at significant risk. This is especially true when it comes to breaches of confidential information that could have a catastrophic impact.

“Critical data, including customers’ private data or trade secrets, could be accessed and leaked by the malicious actor,” Franzyshen said. “This ruins trust and may have serious financial impacts as well as legal consequences.”

Following such breaches, there are frequently extensive legal disputes, regulatory penalties, and substantial losses of customer trust that may take years to recover from.

How to respond to the risk of insider threats

Despite the concerning trend of insider attacks, Franzyshen said that even smaller businesses without state-of-the-art security capabilities can protect themselves when hiring remote employees and freelancers.

“Insisting on video interviews is one highly effective tactic,” Franzyshen said. “This strategy not only puts a face to the name, making it easier to confirm a candidate’s legitimacy, but it also offers a great chance to evaluate their professionalism and communication skills.”

Franzyshen added that it can also help to introduce trial periods before offering full-time employment contracts. A probationary period makes it possible to assess a candidate’s compliance with security requirements in depth, leading to a more informed and confident hiring decision down the road.

An urgent call for companies to update their HR technology and processes

Today, criminals have the resources to inject fabricated data, such as fake video feeds, very efficiently. For that reason, Aaron Painter from Nametag warned that HR and hiring teams should not always trust what they see.

“A foreign spy can simply switch their video source to an emulator streaming live deepfake video,” Painter said.

So, what is the solution?

“Instead of trying to detect deepfakes, enterprises need to skip a step and equip their hiring and IT teams with sophisticated identity verification tools to prevent digital injection attacks entirely,” Painter said.

Failing to update hiring processes and deploy technologies that counter AI-driven and deepfake fraud can have devastating consequences.

“Once a threat actor has inside access to your systems, they can steal data, deploy ransomware, and cripple your entire business,” Painter concluded.

How companies can adapt their onboarding policies

Lucas Botzen, an HR expert and CEO of Rivermate, a global hiring company, told Moonlock that GenAI has become a dangerous tool in the wrong hands. It allows anyone to create convincing fake documents and profiles, and even entire identities that can mislead employees and bypass traditional system checks and verifications.

“Such AI-generated resumes and LinkedIn profiles would not raise any flags while going through automated screening processes,” said Botzen.

Criminals and bad actors fabricate work experience, educational credentials, and professional references, and AI makes all of these elements appear legitimate.

“In addition, AI may create credible phishing emails targeting employees, aiming at deceiving them into releasing sensitive information or allowing system access,” Botzen said.

Traditional verification methods, such as cross-referencing other sources or relying on human intuition, are in most cases inadequate against these deceptions.

“This raises the need for advanced verification mechanisms and follow-up monitoring to ensure that whatever seems legitimate is indeed original,” said Botzen.

What are the red flags that may signal a potential insider threat?

Botzen advised companies to run structured background checks. Organizations should also deploy AI-powered tools that can flag likely anomalies in candidates’ profiles, and make sure onboarding procedures include security processes.

“The stakes are far too high not to entertain the idea of insider threats when it can be devastatingly expensive — in both monetary and reputational cost — for a breach to occur,” Botzen said.

Proactive measures can also help small businesses significantly reduce their risk. These include third-party services that specialize in identity verification and fraud detection, 2FA or MFA on every access point into the system, and clear policies and security procedures for remote workers.

According to Botzen, red flags include:

- A candidate who seems overly secretive

- A candidate who is averse to sharing details of their work

- An applicant who shows unusual interest in accessing sensitive information

- An employee who bypasses security measures

- An employee who neglects security best practices

- Anyone who shares passwords and does not use 2FA or MFA

- An employee downloading unauthorized software

- Coworkers reporting suspicious situations or inconsistencies

“The rise of generative AI and deepfake technology has forced significant changes in HR, security, and IT processes, most notably around recruiting,” Botzen said.

Change is needed to mitigate the risks of modern technologies

The convergence of generative AI, identity theft, and deepfake technology has created a sophisticated threat landscape that organizations must urgently address.

To safeguard against insider threats, a comprehensive and multi-layered approach is essential. HR departments must overhaul hiring processes to include robust identity verification, such as biometric checks and third-party credential validation. Security teams should collaborate with HR to provide extensive security training for new hires, emphasizing the risks posed by deepfakes and AI-generated content.

IT departments must deploy advanced monitoring tools to detect suspicious activities and implement stringent controls over network access. Additionally, the use of AI-driven tools to analyze video interviews and the thorough vetting of remote employees, devices, and digital footprints are critical components of a robust defense strategy.
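To make "monitoring tools to detect suspicious activities" a little more concrete, here is a minimal Python sketch of one common idea behind such tools: comparing each user’s activity against their own historical baseline. The metric (sensitive files accessed per day), the data structures, the function name `flag_unusual_access`, and the three-standard-deviation threshold are all illustrative assumptions, not a method recommended by the experts quoted here. Commercial monitoring products rely on far richer signals.

```python
from statistics import mean, stdev

# Illustrative threshold (an assumption): flag activity more than three
# standard deviations above a user's own historical baseline.
DEFAULT_SIGMAS = 3.0
# Minimum spread used when a user's history is perfectly flat (stdev of 0),
# so a tiny change is not automatically treated as anomalous.
MIN_SPREAD = 1.0


def flag_unusual_access(
    history: dict[str, list[int]],
    today: dict[str, int],
    sigmas: float = DEFAULT_SIGMAS,
) -> dict[str, str]:
    """Return {user: reason} for accounts that warrant a closer look.

    history: hypothetical past daily counts of sensitive files each user accessed.
    today:   hypothetical count of sensitive files each user accessed today.
    """
    flagged: dict[str, str] = {}
    for user, count in today.items():
        past = history.get(user, [])
        if len(past) < 2:
            # stdev() needs at least two data points. Brand-new accounts,
            # such as a recent hire, have no baseline yet, so route them
            # to manual review instead of silently skipping them.
            flagged[user] = "no baseline"
            continue
        limit = mean(past) + sigmas * max(stdev(past), MIN_SPREAD)
        if count > limit:
            flagged[user] = "above baseline"
    return flagged


history = {"alice": [10, 12, 11, 9, 13], "bob": [5, 6, 5, 7, 6]}
print(flag_unusual_access(history, {"alice": 12, "bob": 40, "carol": 3}))
```

A flag from a check like this is only a prompt for a human to investigate, not proof of malicious intent.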

By adopting these measures, organizations can significantly reduce their vulnerability to insider threats driven by generative AI.